The Tech Buffet #8: Flowise - An Interface To Build and Test LLM Apps Easily

With no-code, directly from the browser.

Hello there, I’m Ahmed Besbes 👋

I write The Tech Buffet to share weekly practical nuggets to improve your code and ship ML applications faster. Consider subscribing to access exclusive content.

Today, we’ll explore an open-source no-code tool that helps you build and visualize LLM applications.

If you spend time designing RAGs, chatbots, or sophisticated agents, this tool will speed up your prototyping.

↳ Here’s the agenda 📋

A quick intro of the solution

Setup and configuration

Demo: Create a chatbot over PDFs

My honest opinion

Flowise: an interface for building LLM apps

The LangChain framework made it very easy to build LLM applications.

By abstracting objects like chains, retrievers, or document loaders, it gave us the power to compose complex workflows that solve interesting tasks: chatbots over documents, semantic search engines, personal assistants, etc.

Flowise went further to ease this process by providing an interactive UI on top of LangChain.

By using Flowise, it’s now possible to build the same chains directly from the browser by selecting the right components and connecting them.

It does look good, doesn’t it? That’s the power of LangChainJS combined with TypeScript and JavaScript.

Setup and configuration

To start Flowise on your computer, you can either install it with npm or run it in a Docker container. (I recommend the second option.)

↳ Option 1: Install it with npm

To install Flowise, make sure you have NodeJS >= 18.15.0 set up on your computer and run this command:

npm install -g flowise

After it’s installed, you can start Flowise with this command:

npx flowise start

You can also specify the username and password as arguments:

npx flowise start --FLOWISE_USERNAME=user --FLOWISE_PASSWORD=1234

The app is now accessible on http://localhost:3000

↳ Option 2: Run Flowise in a Docker container

To run Flowise using Docker, clone the Flowise repository and go to the docker folder at the root of the project.

Then copy the .env.example file, paste it into the same location, and rename it to .env.

Run this Docker command to start the Flowise server:

docker-compose up -d

With that, the app is accessible on the same URL: http://localhost:3000

Ok this is cool, but show me the app now! 🤯

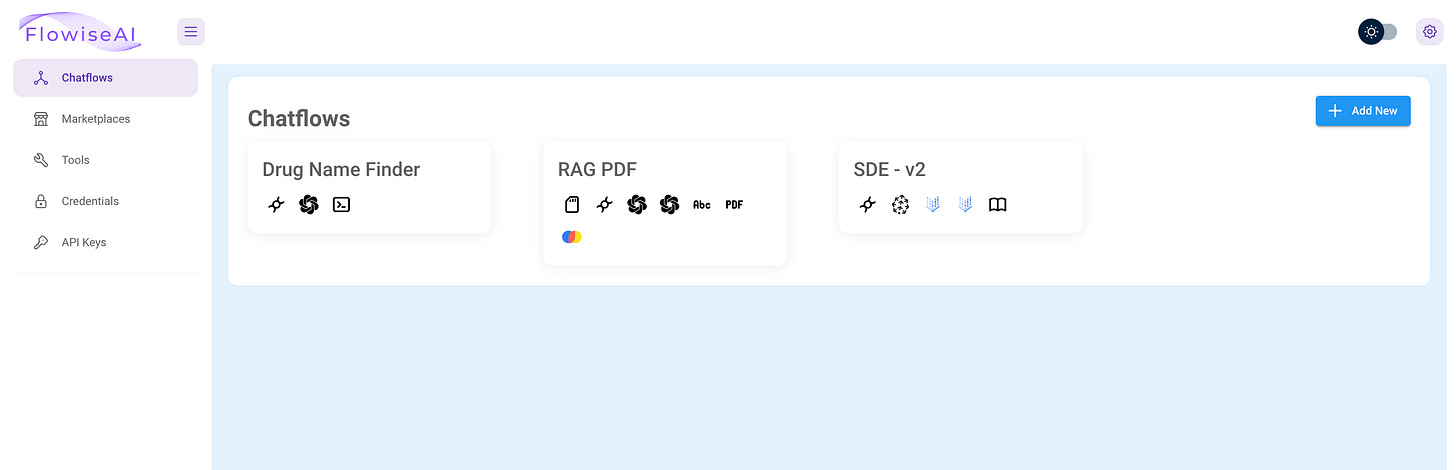

When you open Flowise, you’ll first see a screen with the ChatFlows you’ve already created (a ChatFlow is a workflow you build with Flowise)

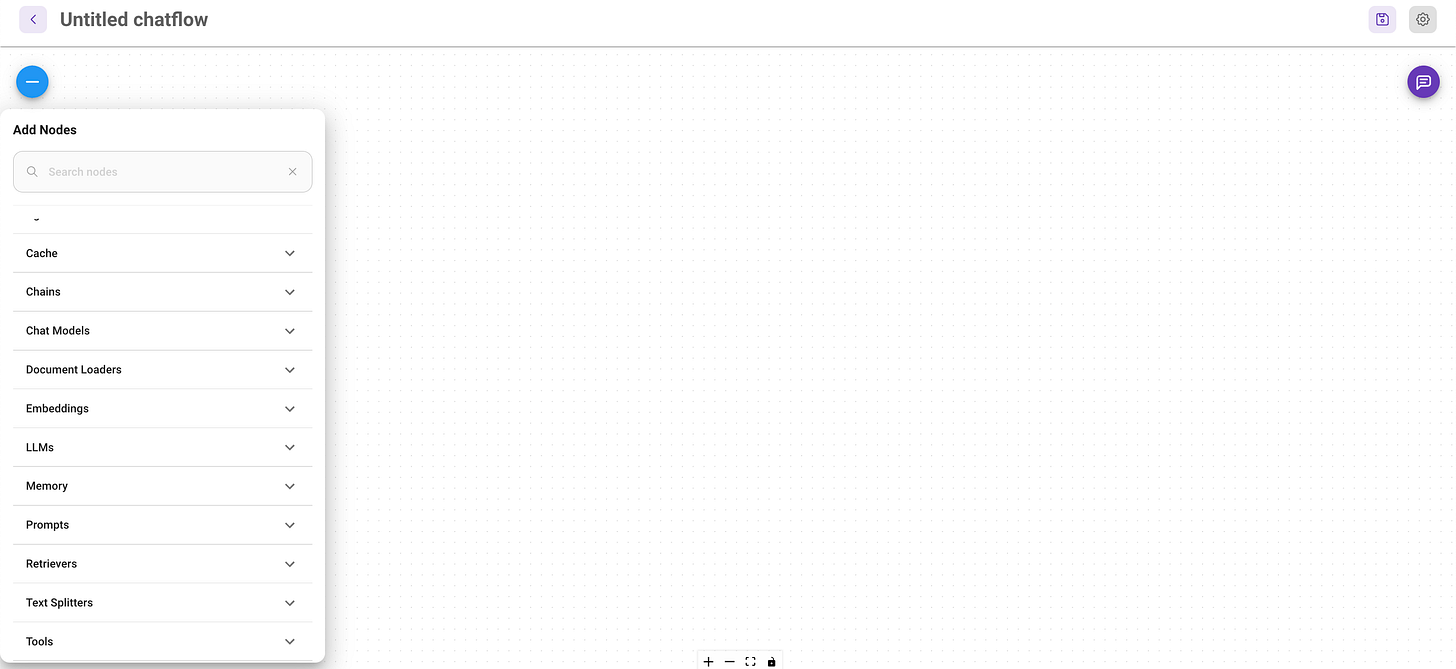

To create a ChatFlow that represents an LLM chain, click on Add New, then click on the plus sign on the left.

This opens a list of nodes that you can drag and drop onto the canvas and connect to each other.

If you’re already familiar with LangChain, these nodes correspond to the same components you already use:

Document Loaders

Embeddings

Prompts

Retrievers

…

If you click on Document Loaders for example, you’ll find many options that you can pick depending on your data format (CSV, PDF, Markdown, etc.)

If you click on Embeddings, you’ll find several embedding providers as well: AWS, GCP, Azure, OpenAI, and Hugging Face, for example.

As for the vector stores, you also have a lot of options (Chroma, Weaviate, Pinecone …)

With Flowise, you can either populate your vector store directly in a ChatFlow or load an existing one that you created elsewhere. That’s up to you.

Demo: Create a chatbot over PDFs

Here’s a common use case: your company asks you to build a chatbot over internal PDF documents (contracts? invoices? research papers?) to gather insights and test LLM capabilities.

Building this prototype in Flowise is easier than ever.

Start by adding the PDF loader.

Since the PDF is quite long, it has to be split into chunks, so add a Character Text Splitter. You can specify the separator (\n), the chunk size (500 characters), and the chunk overlap (150 characters).

This text splitter must be connected to the PDF file loader.
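To get a feel for what the separator, chunk size, and chunk overlap settings do, here is a simplified sketch in plain Python. It is not Flowise's or LangChain's actual implementation: the function name split_text and its merging logic are my own illustration of the general idea, which is to split on the separator and then pack the pieces into chunks that share some text with their neighbours.

```python
def split_text(text: str, separator: str = "\n",
               chunk_size: int = 500, chunk_overlap: int = 150) -> list[str]:
    """Split text on `separator`, then merge the pieces into overlapping chunks.

    Illustrative only: a simplified take on character-based splitting.
    A single piece longer than chunk_size is kept as-is, not cut further.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")

    def length(parts: list[str]) -> int:
        return len(separator.join(parts))

    pieces = [p for p in text.split(separator) if p]
    chunks: list[str] = []
    window: list[str] = []  # pieces that make up the chunk being built

    for piece in pieces:
        if window and length(window + [piece]) > chunk_size:
            # The current chunk is full: emit it.
            chunks.append(separator.join(window))
            # Keep only a tail that fits the overlap budget
            # and still leaves room for the incoming piece.
            while window and (length(window) > chunk_overlap
                              or length(window + [piece]) > chunk_size):
                window.pop(0)
        window.append(piece)

    if window:
        chunks.append(separator.join(window))
    return chunks
```

With ten 100-character lines and the settings from the demo, each chunk holds four lines and repeats the last line of the previous chunk, so a sentence sitting at a chunk boundary is not lost to the retriever.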

The chunked documents must now be inserted in a vector store. Let’s import one.

In this demo, we’ll use Chroma.

As you see, the Chroma database is missing the embedding model so let’s add that.

I’m going to pick one from OpenAI.

Since we need an API key to perform calls to OpenAI, we can add and save these credentials in Flowise. (They’re encrypted)

Now the last part: we need to create a Conversational Retrieval QA chain that takes as inputs a chat model and the Chroma database.

So let’s go ahead and add those.
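If you're wondering what this chain does with the vector store, here is a toy sketch of the retrieval step. Everything in it is a stand-in: the bag-of-words embed function replaces a real embedding model such as OpenAI's, the in-memory list replaces Chroma, and the prompt template is made up for illustration. The idea it shows is the general one: embed the question, rank the stored chunks by similarity, and pass the best ones to the chat model as context.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the text that would be sent to the chat model (hypothetical template)."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical chunks, as if they came out of the text splitter.
sample_chunks = [
    "Use f-strings to format strings in Python.",
    "Prefer enumerate over range when looping.",
    "Use zip to process iterators in parallel.",
]
top = retrieve("How should I format strings?", sample_chunks, k=1)
prompt = build_prompt("How should I format strings?", top)
```

A real conversational chain also takes the chat history into account when forming the query, which this sketch leaves out.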

Here’s the final flow. The first time I made it, it took me probably 3 minutes.

That’s pretty much it.

In this demo, I’m going to chat with the first chapter of this book: Effective Python: 90 Specific Ways to Write Better Python

To do this, you first need to upload the book directly to the PDF loader.

To chat with it, you simply need to click on the purple icon (on the right) to open an interactive chat.

Here’s an example question:

How awesome is that?

Notice that Flowise can also display the items the LLM used when generating the answer. This helps diagnose the results.

And it doesn’t stop there. Here comes the best part:

Once your chatbot is built and tested, you get an API endpoint that lets you interact with it programmatically and embed it in other applications.

It’s as simple as writing these lines of code.
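As a minimal sketch, here is what such a call could look like in Python using only the standard library. It assumes the prediction endpoint lives at /api/v1/prediction/<chatflow-id> and accepts a JSON body with a question field. Check the API dialog of your own ChatFlow for the exact URL and payload, since these may differ between versions. The chatflow ID below is a placeholder, and an instance protected by a username and password may need extra authentication.

```python
import json
import urllib.request


def prediction_url(base_url: str, chatflow_id: str) -> str:
    """Build the endpoint URL (path is an assumption; verify it in your Flowise UI)."""
    return f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"


def build_request(base_url: str, chatflow_id: str, question: str) -> urllib.request.Request:
    """Prepare a POST request carrying the question as JSON."""
    payload = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        prediction_url(base_url, chatflow_id),
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, chatflow_id: str, question: str, timeout: float = 60.0) -> dict:
    """Send the question to a running Flowise instance and return the parsed JSON reply."""
    request = build_request(base_url, chatflow_id, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example usage (requires a running Flowise server and a real chatflow ID):
# answer = ask("http://localhost:3000", "<your-chatflow-id>", "What is this chapter about?")
# print(answer)
```

Splitting the request construction from the network call keeps the function that talks to the server tiny, and makes the URL and payload easy to inspect before anything is sent.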

Before I move to the last section of this post, I wanted to mention that this demo is built locally. In a real setting though, you’d need to deploy Flowise on the cloud so that teammates can easily collaborate on the same ChatFlow.

Check this doc to learn more about the deployment options.

My honest opinion

Flowise is a powerful tool that makes prototyping chains very easy. It provides a nice UI to assemble blocks together and quickly test them.

I also like the ability to embed the ChatFlow by using the provided endpoint.

As this solution is relatively new, there is room for improvement:

Better stack traces to diagnose bugs

A status indicator when a heavy workload (e.g. embedding) is running or when a large file is being ingested

More detailed documentation

Will I use this solution?

In the short term, absolutely.

However, my commitment in the long run is less certain. This hesitancy is rooted in the fact that Flowise is closely intertwined with LangChain's core components. Although LangChain is a great package, I occasionally find its architecture overly complex. At times, constructing chains or RAGs from the ground up seems more practical. Hence, I might consider exploring alternative frameworks in the future.

Perhaps, if the community someday reaches a consensus on a standardized approach for representing LLM workflows, solutions like Flowise could enjoy broader adoption.

That’s all for today. I hope you discovered a tool that will make building and prototyping LLM apps easier.

Thanks for reading,

Until next time! 👋

Ahmed