The Tech Buffet #15: Build and Evaluate LLM Applications with TruLens

Understand why your LLM responded the way it did and take action to prevent hallucinations

Hello 👋 this is Ahmed, your host at The Tech Buffet. If you’re new to this newsletter, it’s your go-to place for ML engineering insights, coding best practices and tool reviews.

You’ll also see a lot of content about LLMs here. No hype, no BS. I promise.

This issue is about a tool I started using recently to track the performance of RAG-based applications. It’s called TruLens.

TruLens is an open-source package that helps you evaluate and monitor your LLM applications.

It comes in particularly handy if you build RAG (retrieval-augmented generation) applications and struggle to find their optimal parameters.

To help with this, TruLens tracks your RAG experiments, compares them with custom metrics, diagnoses errors, and allows you to land on an optimal configuration.

In this issue, we’ll see how this works on a simple and practical use case: answering questions about Albert Einstein given a short text about him.

This is a hands-on tutorial that you can follow step by step (it’s best read on a wide screen like a laptop or a tablet).

Let’s dive in.

Disclaimer: I’m not affiliated with TruLens and this is not a sponsored post.

This issue is broken into 5 sections.

1️⃣ Setup

To prepare the environment and the data needed to build the RAG, we must go over some preliminary steps.

We first install these libraries:

```
!pip install trulens_eval==0.18.1 chromadb==0.4.18 openai==1.3.1
```

We set an OpenAI key as an environment variable so we can use GPT-3.5 as the LLM.

```python
import os

# Replace the placeholder with your own OpenAI API key
os.environ["OPENAI_API_KEY"] = "<key>"
```

👉 You can also plug in any open-source LLM from Hugging Face. Learn more about that here.

We define a simple text about Albert Einstein to build our RAG on:

```python
einstein_info = """
Albert Einstein (/ˈaɪnstaɪn/ EYEN-styne;[4] German: [ˈalbɛɐt ˈʔaɪnʃtaɪn] ⓘ; 14 March 1879 – 18 April 1955)
was a German-born theoretical physicist who is widely held to be one of the greatest and most influential
scientists of all time. Best known for developing the theory of relativity, Einstein also made important
contributions to quantum mechanics, and was thus a central figure in the revolutionary reshaping of the
scientific understanding of nature that modern physics accomplished in the first decades of the twentieth century.[1][5]
His mass–energy equivalence formula E = mc2, which arises from relativity theory, has been called "the world's
most famous equation".[6] He received the 1921 Nobel Prize in Physics "for his services to theoretical physics,
and especially for his discovery of the law of the photoelectric effect",[7] a pivotal step in the development
of quantum theory. His work is also known for its influence on the philosophy of science.[8][9] In a 1999 poll
of 130 leading physicists worldwide by the British journal Physics World, Einstein was ranked the greatest
physicist of all time.[10] His intellectual achievements and originality have made the word Einstein
broadly synonymous with genius.[11]
"""
```

Finally, we create an in-memory chromadb vector store to hold the embedding of this text.

2️⃣ Build a custom RAG with instrumentation

In this step, we define a custom RAG in a Python class and add TruLens instrumentation to each of its methods.

TruLens provides different instrumentation frameworks to allow you to inspect and evaluate the internals of your application and its associated records. This helps you track a wide variety of usage metrics and metadata along with the inputs and outputs of the application.

Let’s first look at the RAG implementation.

We will define three methods:

- retrieve: extracts relevant texts from the vector store to use as context for an input query (our chromadb store holds a single text since this is a simple demo, but you get the idea).
- generate_completion: generates an answer given the query and the retrieved context.
- query: combines the two previous methods to produce the RAG’s final answer.

For each of these methods, we will add the @instrument decorator to track usage metrics and analyze the input and output.
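To illustrate what that instrumentation captures, here is a toy sketch with no TruLens dependency. The instrument decorator, the CALLS log and the ToyRAG class are all hypothetical: retrieval is plain word overlap and generate_completion echoes a chunk instead of calling GPT-3.5. The real TruLens decorator records more than this, including the usage metrics mentioned above.

```python
import functools
import inspect
import re

CALLS = []  # toy call log, one entry per instrumented method call


def instrument(method):
    """Hypothetical decorator: records each call's named arguments and return value."""
    signature = inspect.signature(method)

    @functools.wraps(method)
    def wrapper(self, *args, **kwargs):
        bound = signature.bind(self, *args, **kwargs)
        arguments = {k: v for k, v in bound.arguments.items() if k != "self"}
        result = method(self, *args, **kwargs)
        CALLS.append({"method": method.__name__, "args": arguments, "rets": result})
        return result

    return wrapper


def words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyRAG:
    def __init__(self, documents: list) -> None:
        self.documents = documents

    @instrument
    def retrieve(self, query: str) -> list:
        # Keep every document that shares at least one word with the query.
        return [doc for doc in self.documents if words(query) & words(doc)]

    @instrument
    def generate_completion(self, query: str, context_str: list) -> str:
        # Placeholder for the LLM call: answer with the first retrieved chunk.
        return context_str[0] if context_str else "I don't know."

    @instrument
    def query(self, query: str) -> str:
        context_str = self.retrieve(query)
        return self.generate_completion(query, context_str)


rag = ToyRAG([
    "Albert Einstein was a German-born theoretical physicist.",
    "Bananas are rich in potassium.",
])
answer = rag.query("Who is Albert Einstein?")
for call in CALLS:
    print(call["method"], call["args"])
```

Because query calls the other two methods, the log ends up holding retrieve, generate_completion and query, each with its named arguments and return value. Named arguments and return values are exactly what the feedback functions in the next section point at.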

3️⃣ Create feedback functions

Now that the RAG is created, we need to define the feedback functions that evaluate it.

Feedback functions score an LLM application by analyzing its inputs, its outputs, and intermediate results such as the retrieved context.

TruLens provides different feedback functions designed for different purposes, but in our case, we’ll only use the three following ones:

✅ Response relevance: to measure the relationship of the final answer to the input question

✅ Context relevance: to measure the relationship between the provided context and the input question.

✅ Groundedness: to check if the answer is grounded in its supplied context
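TruLens computes these three scores with an LLM judge. Purely to show which pieces of a record each one compares, here is a crude sketch where word overlap replaces the LLM; the helper names are hypothetical and the numbers are not comparable to TruLens scores.

```python
import re


def tokens(text: str) -> set:
    """Lowercase word set; a crude stand-in for what an LLM judge would read."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def coverage(source: str, target: str) -> float:
    """Fraction of the target's words that also appear in the source (0.0 to 1.0)."""
    target_words = tokens(target)
    if not target_words:
        return 0.0
    return len(target_words & tokens(source)) / len(target_words)


def rag_triad(question: str, context: str, answer: str) -> dict:
    return {
        # How much of the question does the answer address?
        "Answer Relevance": coverage(answer, question),
        # How much of the question does the retrieved context cover?
        "Context Relevance": coverage(context, question),
        # How much of the answer is backed by the context?
        "Groundedness": coverage(context, answer),
    }


scores = rag_triad(
    question="When was Einstein born?",
    context="Albert Einstein was born on 14 March 1879.",
    answer="Einstein was born on 14 March 1879.",
)
print(scores)
```

The missing word "when" pulls both relevance scores down to 0.75 even though the answer is correct, which illustrates why an LLM judge is preferable to lexical matching.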

You can learn more about TruLens feedback functions here.

Back to code.

To define the three feedback functions, we first need an LLM. It can differ from the one the RAG uses to generate answers: we could, for example, pick GPT-4 for evaluation tasks, since it shows better reasoning capabilities than GPT-3.5.

```python
from trulens_eval.feedback.provider.openai import OpenAI as fOpenAI

fopenai = fOpenAI()
```

Each feedback function is then defined separately.

👉 Question / Answer relevance between the overall question (the query argument) and answer (the output):

```python
from trulens_eval import Feedback, Select
from trulens_eval.feedback import Groundedness

f_qa_relevance = (
    Feedback(
        fopenai.relevance_with_cot_reasons,
        name="Answer Relevance",
    )
    .on(Select.RecordCalls.retrieve.args.query)  # input: the user's question
    .on_output()  # output: the RAG's final answer
)
```

⚠️ Notice that the input question is referenced here as the query argument of the retrieve method defined in the RAG class. If you had named this method fetch, for example, the feedback function would be written as follows:

```python
f_qa_relevance = (
    Feedback(
        fopenai.relevance_with_cot_reasons,
        name="Answer Relevance",
    )
    .on(Select.RecordCalls.fetch.args.query)
    .on_output()
)
```
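One way to read Select.RecordCalls.retrieve.args.query is as a path into the record of a call: the recorded calls, then the retrieve method, then its arguments, then query. The sketch below applies that idea to a plain nested dict. The record layout and the select/try_select helpers are our own simplification, not TruLens's record schema or selector implementation.

```python
def select(record: dict, path: str):
    """Follow a dotted path such as 'RecordCalls.retrieve.args.query' through nested dicts."""
    value = record
    for key in path.split("."):
        value = value[key]  # raises KeyError if a key along the path is missing
    return value


def try_select(record: dict, path: str, default=None):
    try:
        return select(record, path)
    except KeyError:
        return default


# Simplified, hypothetical record of one call to rag.query(...)
record = {
    "RecordCalls": {
        "retrieve": {
            "args": {"query": "Who is Albert Einstein?"},
            "rets": ["Albert Einstein was a German-born theoretical physicist."],
        },
    },
    "output": "Albert Einstein was a German-born theoretical physicist.",
}

question = select(record, "RecordCalls.retrieve.args.query")
context = select(record, "RecordCalls.retrieve.rets")
print(question, context)
print(try_select(record, "RecordCalls.fetch.args.query"))  # no method named fetch was recorded
```

A path naming a method that was never recorded (fetch here) has nothing to resolve, which is why the selector must match the method name in your class.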

👉 Question / Statement relevance between the question (the query argument) and each context chunk:

```python
import numpy as np  # needed for .aggregate(np.mean)

f_context_relevance = (
    Feedback(
        fopenai.qs_relevance_with_cot_reasons,
        name="Context Relevance",
    )
    .on(Select.RecordCalls.retrieve.args.query)  # the user's question
    .on(Select.RecordCalls.retrieve.rets.collect())  # the retrieved context
    .aggregate(np.mean)  # average the scores into a single value
)
```

In this example, the context is the value returned by the retrieve method, which the selector references through rets.
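The .aggregate(np.mean) step reduces the scores produced for the context into a single number. Here is a minimal sketch of that reduction, assuming one score per retrieved chunk; it uses statistics.mean instead of NumPy and a hypothetical word-overlap scorer instead of the LLM.

```python
import re
from statistics import mean


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def chunk_relevance(question: str, chunk: str) -> float:
    """Toy scorer: fraction of the question's words found in the chunk."""
    q = tokens(question)
    return len(q & tokens(chunk)) / len(q) if q else 0.0


def context_relevance(question: str, chunks: list, aggregate=mean):
    """Score every retrieved chunk, then reduce the per-chunk scores to one number."""
    per_chunk = [chunk_relevance(question, chunk) for chunk in chunks]
    return aggregate(per_chunk), per_chunk


score, per_chunk = context_relevance(
    "Did Einstein win a Nobel Prize?",
    ["Einstein received the 1921 Nobel Prize in Physics.", "Bananas are rich in potassium."],
)
print(per_chunk, score)
```

The mean penalizes every irrelevant chunk. Passing aggregate=max instead would only ask whether at least one retrieved chunk is relevant.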

👉 Groundedness:

```python
grounded = Groundedness(groundedness_provider=fopenai)

# Define a groundedness feedback function
f_groundedness = (
    Feedback(
        grounded.groundedness_measure_with_cot_reasons,
        name="Groundedness",
    )
    .on(Select.RecordCalls.retrieve.rets.collect())  # source: the retrieved context
    .on_output()  # statements to check: the RAG's answer
    .aggregate(grounded.grounded_statements_aggregator)
)
```
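Groundedness works per statement: the answer is broken into statements, each one is checked for supporting evidence in the context, and grounded_statements_aggregator combines the results (the dashboard output later in this issue shows that per-statement view). Here is a toy version of that pipeline; the sentence splitting, the word-overlap support score and the 0.5 threshold are all our own assumptions, not how TruLens computes it.

```python
import re
from statistics import mean


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support(statement: str, chunk: str) -> float:
    """Toy evidence score: fraction of the statement's words found in the chunk."""
    words = tokens(statement)
    return len(words & tokens(chunk)) / len(words) if words else 0.0


def groundedness(answer: str, chunks: list, threshold: float = 0.5):
    """Split the answer into sentences, score each against its best chunk, then average."""
    statements = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    per_statement = []
    for statement in statements:
        best = max((support(statement, chunk) for chunk in chunks), default=0.0)
        # Binarize: a statement counts as grounded only if enough of it is supported.
        per_statement.append(1.0 if best >= threshold else 0.0)
    overall = mean(per_statement) if per_statement else 0.0
    return overall, per_statement


overall, per_statement = groundedness(
    "Einstein received the Nobel Prize in 1921. He also enjoyed playing the violin.",
    ["Einstein received the 1921 Nobel Prize in Physics."],
)
print(per_statement, overall)
```

The violin sentence is true, yet it scores 0 because the context doesn't support it: groundedness measures support in the retrieved context, not factual correctness.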

Now, we wrap the custom RAG with TruCustomApp and add the list of feedback functions for evaluation.

```python
from trulens_eval import TruCustomApp

tru_rag = TruCustomApp(
    rag,  # instance of the custom RAG class defined in step 2️⃣
    app_id="RAG-einstein:v1",
    feedbacks=[
        f_groundedness,
        f_qa_relevance,
        f_context_relevance,
    ],
)
```

4️⃣ Run the RAG and track metrics

We’re ready to run some questions over the RAG.

To make sure instrumentation is enabled, you must call the query method inside the tru_rag context manager.
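Why the with block? The toy sketch below shows the general pattern with a hypothetical Recorder class (not TruCustomApp's internals): the wrapped function runs either way, but only calls made inside the block leave a record that feedback functions could later score.

```python
import functools


class Recorder:
    """Hypothetical app wrapper: records calls only while its `with` block is active."""

    def __init__(self) -> None:
        self.active = False
        self.records = []

    def __enter__(self):
        self.active = True
        return self

    def __exit__(self, exc_type, exc, tb):
        self.active = False
        return False  # don't suppress exceptions raised inside the block

    def watch(self, func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            if self.active:  # outside the block, the call runs but leaves no record
                self.records.append({"call": func.__name__, "args": args, "output": result})
            return result
        return wrapper


recorder = Recorder()


@recorder.watch
def query(question: str) -> str:
    return f"Answer to: {question}"


query("This call is not recorded")
with recorder as recording:
    query("Who is Albert Einstein?")
print(len(recorder.records), recorder.records[0]["args"])
```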

with tru_rag as recording:

rag.query("Who is Albert Einstein?")Upon running this query, feedback functions and usage metrics are computed. You can visualize them by calling the get_leaderboard method.

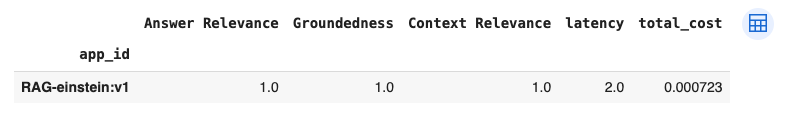

tru.get_leaderboard(app_ids=["RAG-einstein:v1"])We got 1 for every feedback metric. No surprise, our question was easy.

Let’s try another question that goes a bit beyond the provided context.

```python
with tru_rag as recording:
    rag.query("Did Einstein contribute to the development of the atomic bomb?")
```

If we display the leaderboard again, we notice a drop in the average groundedness and context relevance. This is expected: our only text says nothing about the atomic bomb, so the retrieved context is mostly irrelevant to the question and can’t support the answer.
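To see how the averages move, here is the arithmetic with the scores reported in this issue: 1 for every metric on the first query, and 1, 0.2 and 0 for answer relevance, context relevance and groundedness on the second (see the dashboard section). The sketch assumes the leaderboard shows a plain mean per metric over all records.

```python
from statistics import mean

# Per-query feedback scores reported in this issue
records = [
    # "Who is Albert Einstein?": 1 for every feedback metric
    {"Answer Relevance": 1.0, "Context Relevance": 1.0, "Groundedness": 1.0},
    # "Did Einstein contribute to the development of the atomic bomb?"
    {"Answer Relevance": 1.0, "Context Relevance": 0.2, "Groundedness": 0.0},
]

# Assumption: the leaderboard reports the plain mean of each metric over all records.
leaderboard = {metric: mean(r[metric] for r in records) for metric in records[0]}
print(leaderboard)
```

Under that assumption, context relevance drops to 0.6 and groundedness to 0.5, while answer relevance stays at 1.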

5️⃣ Visualize metrics and metadata in the TruLens dashboard

How about diving into the RAG’s answers one by one to diagnose precisely what happened and understand why each metric got its score?

This is where the run_dashboard method comes in handy: calling tru.run_dashboard() starts the dashboard and gives you a URL to open.

If you visit the provided URL, you’ll see a Streamlit app that summarizes your RAG (RAG-einstein:v1).

In the first tab, you’ll see a leaderboard with metrics averaged over all records:

In the evaluations tab, you’ll get more interesting details: the feedback scores for each query, along with the RAG’s answer and the evaluator LLM’s reasoning behind each score.

Let’s inspect what happened for the second query:

We can first see the RAG’s answer:

Then, we can go over each feedback metric to understand why it got its score:

👉 Answer Relevance = 1

reason: The response directly addresses the prompt by stating that Einstein did contribute to the development of the atomic bomb. It provides specific details about his indirect contribution through his scientific work and theories, as well as his involvement in urging the United States to develop the bomb.

👉 Context Relevance = 0.2

reason: The statement provides information about Albert Einstein's contributions to science, including his work on the theory of relativity and quantum mechanics. It also mentions his Nobel Prize in Physics for his discovery of the law of the photoelectric effect. However, it does not specifically mention his contribution to the development of the atomic bomb.

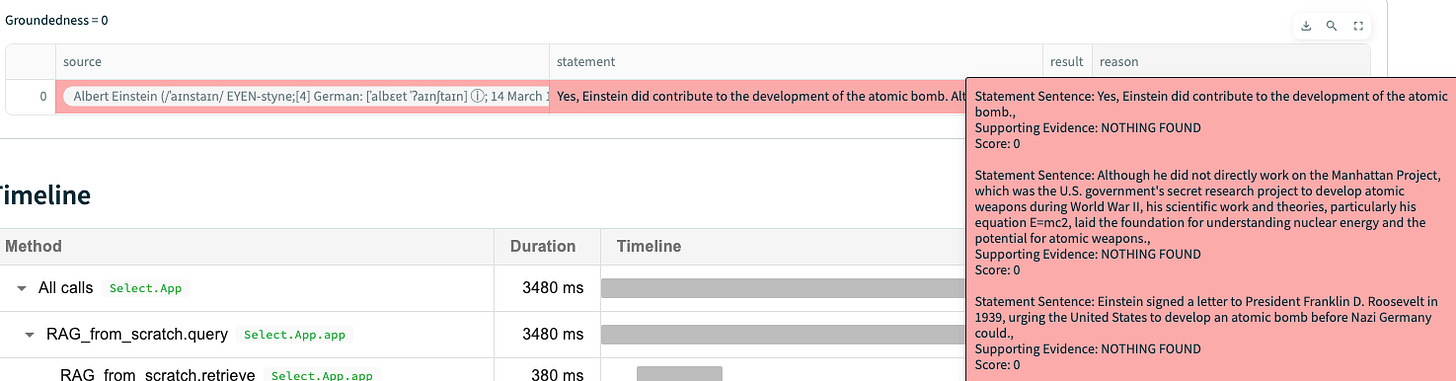

👉 Groundedness = 0

Statement Sentence: Yes, Einstein did contribute to the development of the atomic bomb.,

Supporting Evidence: NOTHING FOUND

Score: 0

Statement Sentence: Although he did not directly work on the Manhattan Project, which was the U.S. government's secret research project to develop atomic weapons during World War II, his scientific work and theories, particularly his equation E=mc2, laid the foundation for understanding nuclear energy and the potential for atomic weapons.,

Supporting Evidence: NOTHING FOUND

Score: 0

Statement Sentence: Einstein signed a letter to President Franklin D. Roosevelt in 1939, urging the United States to develop an atomic bomb before Nazi Germany could.,

Supporting Evidence: NOTHING FOUND

Score: 0

Pretty neat, right?

Conclusion

TruLens is an interesting tool to monitor and diagnose the behavior of LLM applications. It has personally saved me a lot of hassle compared to going through evaluations by hand.

Additionally, it provides a useful dashboard that can be deployed and shared with colleagues and stakeholders.

If the RAG example we went through seems too simple, note that TruLens also integrates with LangChain and LlamaIndex for building more complex workflows.

That’s all for me today.

Thanks for reading 🙏

Ahmed
