The Tech Buffet #16: Quickly Evaluate your RAG Without Manually Labeling Test Data

Automate the evaluation of your Retrieval-Augmented Generation (RAG) apps without any human intervention

Hello everyone 👋 Ahmed here. I’m the author of The Tech Buffet, a newsletter that demystifies complex topics in programming and AI. Subscribe for exclusive content.

Today’s topic is evaluating your RAG without manually labeling test data.

Measuring the performance of your RAG is something you should care about, especially if you’re building such systems and serving them in production.

Besides giving you a rough idea of how your application behaves, evaluating your RAG provides quantitative feedback that guides experimentation and the selection of appropriate parameters (LLMs, embedding models, chunk size, top K, etc.).

Evaluating your RAG is also important for your client or stakeholders because they are always expecting performance metrics to validate your project.

Here’s what this issue covers:

Automatically generating a synthetic test set from your RAG’s data

Overview of popular RAG metrics

Computing RAG metrics on the synthetic dataset using the Ragas package

PS*: The full code is accessible on a Colab notebook.

PS**: To learn more about RAGs, you can check my previous articles here.

1—Generate a synthetic test set 🧪

Let’s say you’ve just built a RAG and now want to evaluate its performance.

To do that, you need an evaluation dataset that has the following columns:

question (str): to evaluate the RAG on

ground_truths (list): the reference (i.e. true) answers to the questions

answer (str): the answers predicted by the RAG

contexts (list): the list of relevant contexts the RAG used for each question to generate the answer

→ the first two columns represent ground-truth data and the last two columns represent the RAG predictions.
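To make the expected shape concrete, here is a minimal sketch of a single row and a small check of its column types. The values are invented for illustration, and `validate_row` is a hypothetical helper, not something provided by Ragas.

```python
# Hypothetical example row; the values are invented for illustration only.
row = {
    "question": "Who created Python?",
    "ground_truths": ["Python was created by Guido van Rossum."],
    "answer": "Guido van Rossum created Python.",
    "contexts": [
        "Python was conceived in the late 1980s by Guido van Rossum.",
        "Python 2.0 was released in 2000.",
    ],
}

# Expected type of each column, as listed above.
EXPECTED_TYPES = {
    "question": str,
    "ground_truths": list,
    "answer": str,
    "contexts": list,
}


def validate_row(row: dict) -> list[str]:
    """Return a list of problems found in a row (empty if the row is valid)."""
    problems = []
    for column, expected_type in EXPECTED_TYPES.items():
        if column not in row:
            problems.append(f"missing column: {column}")
        elif not isinstance(row[column], expected_type):
            problems.append(f"{column} should be {expected_type.__name__}")
    return problems


print(validate_row(row))  # an empty list means the row has the expected shape
```

Running a check like this before the evaluation step catches the most common mistake early: passing a plain string where a list of strings is expected.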

To build such a dataset, we first need to generate tuples of questions and corresponding answers.

Then, in the next step, we need to run the RAG over these questions to make the predictions.

👉 Generate questions and ground-truth answers (the theory)

To generate tuples of (question, answer), we first need to prepare the RAG data, split it into chunks, and embed it into a vector database.

Once the splits are created and embedded, we will instruct an LLM to generate N_q questions from N_c topics to finally obtain N_q x N_c tuples of questions and answers.

To generate questions and answers from a given context, we need to go through the following steps:

1. Sample a random split and use it as a root context

2. Fetch K similar contexts from the vector database

3. Concatenate the texts of the root context with its K neighbors to build a larger context

4. Use the large `context` and `num_questions` in the following prompt template to generate questions and answers

```
"""\
Your task is to formulate exactly {num_questions} questions from given context and provide the answer to each one.

End each question with a '?' character and then in a newline write the answer to that question using only the context provided.
Separate each question/answer pair by "XXX"
Each question must start with "question:".
Each answer must start with "answer:".

The question must satisfy the rules given below:
1.The question should make sense to humans even when read without the given context.
2.The question should be fully answered from the given context.
3.The question should be framed from a part of context that contains important information. It can also be from tables,code,etc.
4.The answer to the question should not contain any links.
5.The question should be of moderate difficulty.
6.The question must be reasonable and must be understood and responded by humans.
7.Do no use phrases like 'provided context',etc in the question
8.Avoid framing question using word "and" that can be decomposed into more than one question.
9.The question should not contain more than 10 words, make of use of abbreviation wherever possible.

context: {context}
"""
```

5. Repeat steps 1 to 4 `N_c` times to vary the context and generate different questions
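The steps above can be sketched in plain Python. This is only an illustration under stated assumptions: `similarity` is a toy word-overlap score standing in for the vector database lookup, `PROMPT_TEMPLATE` is a shortened stand-in for the full prompt, and `build_context` and `build_prompts` are hypothetical names, not the notebook's code.

```python
import random


def similarity(a: str, b: str) -> float:
    """Toy word-overlap (Jaccard) score; a stand-in for vector similarity."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def build_context(splits: list[str], k: int, rng: random.Random) -> str:
    """Steps 1 to 3: sample a root split, fetch its k nearest neighbors, concatenate."""
    root_index = rng.randrange(len(splits))
    root = splits[root_index]
    others = [s for i, s in enumerate(splits) if i != root_index]
    neighbors = sorted(others, key=lambda s: similarity(root, s), reverse=True)[:k]
    return "\n".join([root, *neighbors])


# Shortened stand-in for the prompt template shown above.
PROMPT_TEMPLATE = (
    "Your task is to formulate exactly {num_questions} questions from given "
    "context and provide the answer to each one.\n\ncontext: {context}"
)


def build_prompts(splits, num_contexts, num_questions, k=2, seed=0):
    """Steps 4 and 5: fill the template once per sampled context."""
    rng = random.Random(seed)
    return [
        PROMPT_TEMPLATE.format(
            num_questions=num_questions,
            context=build_context(splits, k, rng),
        )
        for _ in range(num_contexts)
    ]


splits = [
    "Python is a high-level programming language.",
    "Python supports multiple programming paradigms.",
    "Guido van Rossum began working on Python in the late 1980s.",
    "The language emphasizes code readability.",
]
prompts = build_prompts(splits, num_contexts=3, num_questions=2)
print(len(prompts))  # one prompt per sampled context
```

Each prompt would then be sent to the LLM, so N_c prompts asking for N_q questions each yield up to N_q x N_c question/answer pairs.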

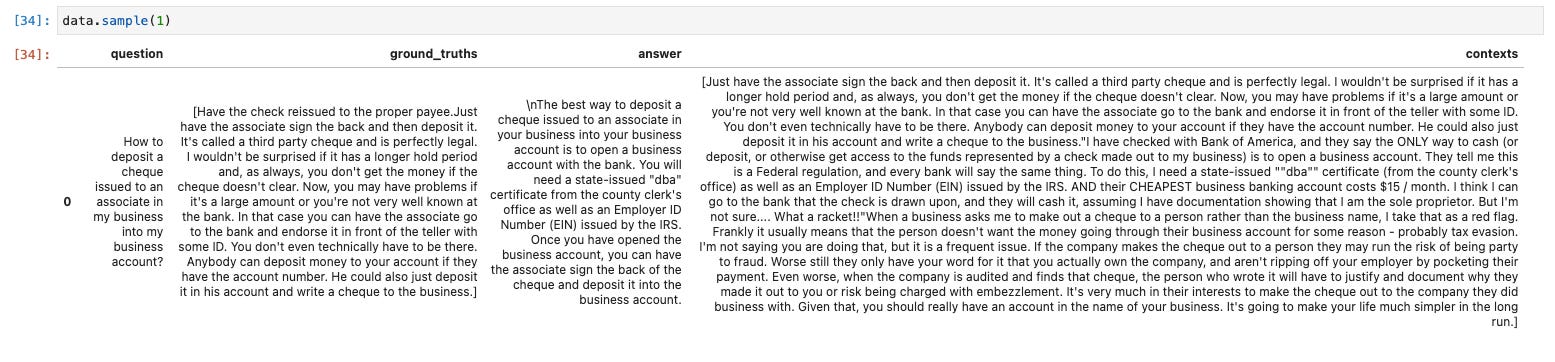

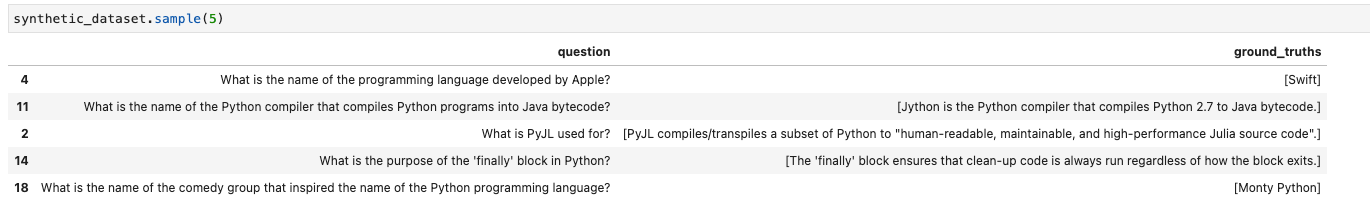

I’ve used this workflow to produce questions and answers on Python programming and here’s a sample of the results I got.

Pretty neat, right? No manual intervention.

👉 Python implementation 💻

Let’s first start by building a vectorstore that includes the data used by the RAG.

We can load that from Wikipedia (but if you have any interesting PDF on Python programming, you can load that too)

```python
from langchain.document_loaders import WikipediaLoader

topic = "python programming"

wikipedia_loader = WikipediaLoader(
    query=topic,
    load_max_docs=1,
    doc_content_chars_max=100000,
)

docs = wikipedia_loader.load()
doc = docs[0]
```

After loading the data, we split it into chunks.

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter

CHUNK_SIZE = 512
CHUNK_OVERLAP = 128

splitter = RecursiveCharacterTextSplitter(
    chunk_size=CHUNK_SIZE,
    chunk_overlap=CHUNK_OVERLAP,
    separators=[". "],
)

splits = splitter.split_documents([doc])
```

Then, we create an index in Pinecone:

```python
import os

import pinecone

# Credentials are read from environment variables.
pinecone.init(
    api_key=os.environ.get("PINECONE_API_KEY"),
    environment=os.environ.get("PINECONE_ENV"),
)

index_name = topic.replace(" ", "-")

# Start from a clean index: delete it first if it already exists.
if index_name in pinecone.list_indexes():
    pinecone.delete_index(index_name)

# The dimension must match the output size of the embedding model.
pinecone.create_index(index_name, dimension=768)
```

We then use a LangChain wrapper to index the splits’ embeddings in it.

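```python
from langchain.vectorstores import Pinecone

# `embedding_model` is the VertexAIEmbeddings instance created in the next snippet;
# it has to be defined before this call runs.
docsearch = Pinecone.from_documents(
    splits,
    embedding_model,
    index_name=index_name,
)
```

Now comes the interesting part: generating the synthetic dataset.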

To do that, we initialize an object from a TestsetGenerator class using an LLM, the document splits, an embedding model, and the name of the Pinecone index.

```python
from langchain.embeddings import VertexAIEmbeddings
from langchain.llms import VertexAI
from testset_generator import TestsetGenerator

generator_llm = VertexAI(
    location="europe-west3",
    max_output_tokens=256,
    max_retries=20,
)
embedding_model = VertexAIEmbeddings()

testset_generator = TestsetGenerator(
    generator_llm=generator_llm,
    documents=splits,
    embedding_model=embedding_model,
    index_name=index_name,
    key="text",
)
```

Then, we call the generate method by passing two parameters:

```python
synthetic_dataset = testset_generator.generate(
    num_contexts=10,
    num_questions_per_context=2,
)
```

Pretty simple, right?

If you’re interested in the implementation details, you can find them in the notebook.
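The notebook holds the actual implementation. To give a rough idea of one step such a generator needs, the sketch below parses raw LLM output that follows the prompt's format: pairs separated by "XXX", questions starting with "question:", and answers starting with "answer:". `parse_qa_pairs` and the sample output are hypothetical and are not taken from the notebook.

```python
def parse_qa_pairs(raw_output: str) -> list[dict]:
    """Split raw LLM output into question/answer dicts, skipping malformed pairs."""
    pairs = []
    for block in raw_output.split("XXX"):
        q_pos = block.find("question:")
        a_pos = block.find("answer:")
        if q_pos == -1 or a_pos == -1 or a_pos < q_pos:
            continue  # the model did not follow the format for this pair
        question = block[q_pos + len("question:"):a_pos].strip()
        answer = block[a_pos + len("answer:"):].strip()
        # The prompt asks for questions ending with '?' and a non-empty answer.
        if question.endswith("?") and answer:
            pairs.append({"question": question, "answer": answer})
    return pairs


# Invented sample of what a well-behaved model could return.
raw_output = """question: Who created Python?
answer: Guido van Rossum.
XXX
question: When was Python 2.0 released?
answer: In 2000.
XXX
this block is malformed and gets skipped"""

for pair in parse_qa_pairs(raw_output):
    print(pair)
```

Skipping malformed blocks instead of raising keeps a long generation run alive when the model occasionally ignores the format.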

We’re only halfway through it. Now, we need to use the RAG to predict the answers for each question and provide the context list used to ground the response.

The RAG is defined in a RAG class, so let’s initialize it first:

```python
from rag import RAG

rag = RAG(
    index_name,
    "text-bison",
    embedding_model,
    "text",
)
```

Then, we iterate over the synthetic dataset by calling the predict method over each question and collect the predictions.

```python
rag_answers = []
contexts = []

for _, row in synthetic_dataset.iterrows():
    question = row["question"]
    prediction = rag.predict(question)

    # Predicted answer for this question
    rag_answer = prediction["answer"]
    rag_answers.append(rag_answer)

    # Texts of the chunks the RAG retrieved to ground the answer
    source_documents = prediction["source_documents"]
    contexts.append([s.page_content for s in source_documents])

synthetic_dataset_rag = synthetic_dataset.copy()
synthetic_dataset_rag["answer"] = rag_answers
synthetic_dataset_rag["contexts"] = contexts
```

And here’s what the final result looks like:

🏆 Congratulations if you’ve made it this far, now you’re ready to evaluate your RAG.

2—Popular RAG metrics 📊

Before jumping into the code, let’s cover the four basic metrics we’ll use to evaluate our RAG.

Each metric examines a different facet. Therefore, when evaluating your application, it is crucial to consider multiple metrics for a comprehensive perspective.

Answer relevancy

The answer relevance metric is designed to evaluate the pertinence of the generated answer in relation to the provided prompt. Answers that lack completeness or include redundant information receive lower scores. This metric utilizes both the question and the answer, generating values between 0 and 1. Higher scores signify better relevance.

Example:

❓Question: What are the key features of a healthy diet?

⬇️ Low relevance answer: A healthy diet is important for overall well-being.

⬆️ High relevance answer: A healthy diet should include a variety of fruits, vegetables, whole grains, lean proteins, and dairy products, providing essential nutrients for optimal health.
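A score like this can be obtained by asking an LLM to write questions back from the answer and comparing them with the original question through embeddings. The sketch below illustrates that idea only; it is not the Ragas implementation. `embed` is a toy bag-of-words stand-in for an embedding model, and the regenerated questions are written by hand as an assumption.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real setup would call an embedding model."""
    return Counter(text.lower().replace("?", "").split())


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(u[w] * v[w] for w in u)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def answer_relevancy_sketch(question: str, regenerated: list[str]) -> float:
    """Mean similarity between the question and questions regenerated from the answer."""
    if not regenerated:
        return 0.0
    q = embed(question)
    scores = [cosine(q, embed(r)) for r in regenerated]
    return sum(scores) / len(scores)


question = "What are the key features of a healthy diet?"

# Hypothetical questions an LLM might write back from each answer.
from_high_answer = [
    "What are the key features of a healthy diet?",
    "What should a healthy diet include?",
]
from_low_answer = ["Why is a healthy diet important?"]

high = answer_relevancy_sketch(question, from_high_answer)
low = answer_relevancy_sketch(question, from_low_answer)
print(high > low)
```

The vague answer leads back to a different question ("why" instead of "what"), which is why it ends up with the lower score.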

Faithfulness

This metric evaluates the factual consistency of the generated answer with the provided context. The calculation involves both the answer and the retrieved context, and the score is scaled to a range between 0 and 1, where higher values indicate better faithfulness.

For an answer to be deemed faithful, all claims made in the response must be inferable from the given context.

Example:

❓Question: What are the main accomplishments of Marie Curie?

📑 Context: Marie Curie (1867–1934) was a pioneering physicist and chemist, the first woman to win a Nobel Prize and the only woman to win Nobel Prizes in two different fields.

⬆️ High faithfulness answer: Marie Curie won Nobel Prizes in both physics and chemistry, making her the first woman to achieve this feat.

⬇️ Low faithfulness answer: Marie Curie won Nobel Prizes only in physics.
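Since an answer is faithful only when its claims can be inferred from the context, a natural way to turn this into a number is the fraction of supported claims. Here is a minimal sketch of that arithmetic; the claims and their verdicts are written by hand for illustration, whereas in practice an LLM would extract and judge them.

```python
def faithfulness_sketch(claim_verdicts: dict[str, bool]) -> float:
    """Fraction of the answer's claims that the context supports."""
    if not claim_verdicts:
        return 0.0
    return sum(claim_verdicts.values()) / len(claim_verdicts)


# Hand-written verdicts (assumptions), judged against the Marie Curie context above.
fully_supported = {
    "Marie Curie won Nobel Prizes in two different fields": True,
    "She was the first woman to win a Nobel Prize": True,
}
contradicted = {
    "Marie Curie won Nobel Prizes only in physics": False,
}
partly_supported = {
    "Marie Curie was a physicist and chemist": True,
    "Marie Curie won Nobel Prizes only in physics": False,
}

print(faithfulness_sketch(fully_supported))
print(faithfulness_sketch(contradicted))
print(faithfulness_sketch(partly_supported))
```

A single unsupported claim is enough to pull the score below 1, which makes this metric a useful hallucination detector.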

Context precision

Context precision is a metric that evaluates whether the ground-truth relevant items present in the `contexts` are ranked near the top. Ideally, all the relevant chunks appear at the top ranks. This metric is computed using the `question` and the `contexts`, with values ranging between 0 and 1, where higher scores indicate better precision.

Answer correctness

This metric measures the accuracy of the generated answer in comparison to the ground truth. This assessment utilizes both the ground truth and the answer, assigning scores within the 0 to 1 range. A higher score indicates a more accurate alignment between the generated answer and the ground truth, indicative of superior correctness.

Example:

🟢 Ground Truth: The Eiffel Tower was completed in 1889 in Paris, France.

⬆️ High answer correctness: The construction of the Eiffel Tower concluded in 1889 in Paris, France.

⬇️ Low answer correctness: Completed in 1889, the Eiffel Tower stands in London, England.
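Two of these scores reduce to simple arithmetic once the per-item judgments exist. The sketch below is an illustration under assumptions, not the Ragas source: context precision is written as average precision over the ranked chunks, and answer correctness as a weighted mean of a factual score and a semantic similarity score. The equal weights are an assumption, and both function names are hypothetical.

```python
def context_precision_sketch(relevance: list[bool]) -> float:
    """Average precision over ranked contexts; relevance[i] flags the chunk at rank i + 1."""
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / hits if hits else 0.0


def answer_correctness_sketch(
    factual_score: float,
    similarity_score: float,
    weights: tuple[float, float] = (0.5, 0.5),  # assumed equal weighting
) -> float:
    """Weighted mean of a factual score and a semantic similarity score."""
    w_factual, w_similarity = weights
    total = w_factual + w_similarity
    return (w_factual * factual_score + w_similarity * similarity_score) / total


# Same two relevant chunks, ranked first versus ranked last.
print(context_precision_sketch([True, True, False]))
print(context_precision_sketch([False, True, True]))

# An answer that sounds similar to the ground truth but gets a fact wrong.
print(answer_correctness_sketch(factual_score=0.5, similarity_score=1.0))
```

The first two calls show why ranking matters: the retrieved chunks are identical, yet pushing the relevant ones down the list lowers the score.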

3—Evaluate RAGs with RAGAS 📏

To evaluate the RAG and compute the four metrics, we can use Ragas.

Ragas (short for RAG Assessment) is a framework that helps you evaluate your RAG pipelines.

It also provides a bunch of metrics and utility functions to generate synthetic datasets.

To run Ragas over our dataset, you first need to import the metrics and convert the dataframe of synthetic data to a Dataset object.

```python
from datasets import Dataset
from ragas.llms import LangchainLLM
from ragas.metrics import (
    answer_correctness,
    answer_relevancy,
    answer_similarity,
    context_precision,
    context_recall,
    context_relevancy,
    faithfulness,
)

synthetic_ds_rag = Dataset.from_pandas(synthetic_dataset_rag)
```

Then we need to configure Ragas to use VertexAI LLMs and embeddings.

This step is important since Ragas is configured to use OpenAI by default.

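```python
metrics = [
    answer_relevancy,
    context_precision,
    faithfulness,
    answer_correctness,
    answer_similarity,
]

# `ragas_vertexai_llm` and `vertexai_embeddings` are not defined in this excerpt;
# presumably they are the wrapped VertexAI LLM and the VertexAI embedding model
# created in the notebook.
for m in metrics:
    m.llm = ragas_vertexai_llm
    if hasattr(m, "embeddings"):
        m.embeddings = vertexai_embeddings

# answer_correctness relies on these two metrics internally,
# so it needs the configured instances as well.
answer_correctness.faithfulness = faithfulness
answer_correctness.answer_similarity = answer_similarity
```

Finally, we call the evaluate function on the synthetic dataset and specify the metrics we want to compute: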

```python
from ragas import evaluate

results_rag = evaluate(
    synthetic_ds_rag,
    metrics=[
        answer_relevancy,
        context_precision,
        faithfulness,
        answer_correctness,
    ],
)
```

Once the evaluation is done, you can print the result directly, or convert it to a dataframe to inspect each of these metrics for every question.
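When inspecting per-question scores, two views tend to be useful: the average of each metric and the questions that score worst. Here is a small standard-library sketch of both. The rows are invented placeholders, not results from this evaluation, and `summarize` and `worst_questions` are hypothetical helpers.

```python
from statistics import mean

# Invented placeholder scores; real values would come from the evaluation above.
per_question = [
    {"question": "Who created Python?", "faithfulness": 1.0, "answer_relevancy": 0.75},
    {"question": "When was Python 2.0 released?", "faithfulness": 0.5, "answer_relevancy": 1.0},
    {"question": "What is the GIL?", "faithfulness": 0.0, "answer_relevancy": 0.25},
]
METRICS = ["faithfulness", "answer_relevancy"]


def summarize(rows: list[dict], metrics: list[str]) -> dict[str, float]:
    """Average each metric over all questions."""
    return {m: mean(r[m] for r in rows) for m in metrics}


def worst_questions(rows: list[dict], metric: str, n: int = 1) -> list[str]:
    """Return the n questions with the lowest score for a given metric."""
    ranked = sorted(rows, key=lambda r: r[metric])
    return [r["question"] for r in ranked[:n]]


print(summarize(per_question, METRICS))
print(worst_questions(per_question, "faithfulness"))
```

Looking at the worst-scoring questions first usually tells you more than the averages: they point to either a retrieval gap or a badly generated test question.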

Conclusion

Generating a synthetic dataset to evaluate your RAG is a good start, especially when you don’t have access to labeled data.

However, this solution also comes with its problems.

Some of the generated answers:

May lack diversity

Are redundant

Are plain rephrasings of the original text and lack the complexity of real questions that require reasoning

Can be too generic (especially in very technical domains)

To tackle these issues, you can adjust and tune your prompts, filter irrelevant questions, create synthetic questions on specific topics, and use Ragas as well for dataset generation.
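As one example of such filtering, the sketch below drops questions that exceed the 10-word limit from the prompt and questions that are near-duplicates of ones already kept. It is a simple heuristic, not a recommended pipeline: the word-overlap measure and the 0.5 threshold are assumptions chosen for illustration, and `filter_questions` is a hypothetical helper.

```python
def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two questions (0 = disjoint, 1 = identical)."""
    words_a = set(a.lower().rstrip("?").split())
    words_b = set(b.lower().rstrip("?").split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def filter_questions(
    questions: list[str],
    max_words: int = 10,          # the limit stated in the generation prompt
    max_similarity: float = 0.5,  # assumed threshold for "redundant"
) -> list[str]:
    """Keep questions that are short enough and not too close to an earlier one."""
    kept = []
    for question in questions:
        if len(question.split()) > max_words:
            continue  # violates the length rule
        if any(jaccard(question, k) > max_similarity for k in kept):
            continue  # near-duplicate of a question we already kept
        kept.append(question)
    return kept


questions = [
    "Who created Python?",
    "Who created the Python language?",
    "What does the Python language emphasize in its overall design philosophy and syntax?",
    "When was Python 2.0 released?",
]
print(filter_questions(questions))
```

With embeddings available, swapping the word-overlap measure for cosine similarity would also catch paraphrases that share few words.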