The Tech Buffet #9: Let's talk about LLM Hallucinations

Why do they happen? How to quantify them? How to avoid them?

Hey there 👋 this is Ahmed. With The Tech Buffet, I share digestible tips and concepts to help you industrialize stable ML and LLM applications.

This issue is one of a kind. It covers hallucinations 🤡.

Not exactly these hallucinations, but the ones Large Language Models face.

If you’ve ever played with ChatGPT, Bard, Claude, or even one of their open-source siblings (Llama 2 or Mistral 7B), you’ve probably noticed that these AI systems sometimes produce misleading or incorrect information.

This is what the AI community has come to call hallucinations.

In this issue, I explain why hallucinations happen, how you can quantitatively evaluate them, and go over the different strategies to mitigate them.

Let’s dig in 🔍

Why do LLMs hallucinate?

When talking about hallucination in the context of LLMs, it’s important to keep in mind that this phenomenon happens not because these systems can “imagine”, “perceive” or “dream”.

First, it's essential to grasp that LLMs are pre-trained to predict tokens. They lack a concept of true or false and instead rely on probabilities to generate text. While this approach enables some unexpected reasoning capabilities, like passing the bar exam or the USMLE, those abilities emerge from the same probabilistic, token-by-token generation.

Additional training steps in modern LLMs, such as instruction tuning and RLHF, introduce some "bias towards factuality" but don't alter the fundamental underlying mechanism or its limitations.

LLMs draw their knowledge from vast sources like the internet, books, Q&A databases, and Wikipedia, and their responses tend to be biased towards what they've encountered most frequently. If you ask a medical question without careful prompting, you might get an answer aligned with established medical literature, or one that echoes random forum discussions.

To sum this up in two sentences: LLMs hallucinate because they lack true reasoning and understanding capabilities. They generate text by extrapolating from a prompt and struggle to cite their sources.

How to quantify LLM hallucinations?

Measuring hallucinations with a single number is important when you run iterative experiments.

This is where FActScore comes in handy.

FActScore is a recent metric that supports both human and model-based evaluation.

It breaks an LLM's generation into "atomic facts" (short statements that each carry a single piece of information) and computes the overall score as the fraction of these atomic facts that are supported, with each fact weighted equally.

Support, in this context, is a simple binary judgment: either the knowledge source backs the atomic fact or it doesn't. To automate this judgment, the authors combine retrieval over the knowledge source with LLM-based evaluators.
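Written as a formula (the notation follows my reading of the FActScore paper, so treat it as a sketch): for a generation $y$ with atomic facts $\mathcal{A}_y$ and knowledge source $\mathcal{C}$, the score is the fraction of supported facts, and a model's FActScore averages it over the prompts the model actually answers.

```latex
f(y) = \frac{1}{|\mathcal{A}_y|} \sum_{a \in \mathcal{A}_y} \mathbb{1}\left[\, a \text{ is supported by } \mathcal{C} \,\right]
\qquad
\mathrm{FActScore}(\mathcal{M}) = \mathbb{E}_{x \in \mathcal{X}}\left[\, f(\mathcal{M}_x) \mid \mathcal{M}_x \text{ responds} \,\right]
```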

Here’s how the FActScore works in practice. 👇

You first instruct your LLM to generate content, say, a biography of Bridget Moynahan.

“Bridget Moynahan is an American actress, model and producer. She is best known for her roles in Grey’s Anatomy, I, Robot and Blue Bloods. She studied acting at the American Academy of Dramatic Arts, and …”

Next, you split this text into atomic facts.

Bridget Moynahan is American.

Bridget Moynahan is an actress.

Bridget Moynahan is a model.

Bridget Moynahan is a producer.

She is best known for her roles in Grey’s Anatomy.

Then, you verify each fact with an external source (e.g. Wikipedia). Think RAG here.

If the source supports the fact, it scores 1; otherwise, it scores 0.
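To make the verification step concrete, here is a minimal Python sketch. It deliberately swaps the retrieval-plus-LLM judge for a crude word-overlap check, and the passages, stopword list, and helper names (`content_words`, `is_supported`) are hypothetical, written only for this illustration. The point is the control flow: each atomic fact is checked against retrieved text and turned into a 1 or a 0.

```python
import re

# Hypothetical stopword list, just large enough for this toy example.
STOPWORDS = {"a", "an", "and", "for", "her", "in", "is", "of", "she", "the"}


def content_words(text: str) -> set:
    """Lowercase the text and keep the words that are not stopwords."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS}


def is_supported(fact: str, passages: list, threshold: float = 1.0) -> bool:
    """Toy stand-in for verification: a fact counts as supported when some
    passage contains at least `threshold` of its content words.
    (FActScore itself uses retrieval plus an LLM judge, not word overlap.)"""
    words = content_words(fact)
    if not words or not passages:
        return False
    best = max(len(words & content_words(p)) / len(words) for p in passages)
    return best >= threshold


# Toy "retrieved" passages written for this example, not the real Wikipedia text.
passages = [
    "Bridget Moynahan is an American actress and model.",
    "She starred in I, Robot and Blue Bloods.",
]
facts = [
    "Bridget Moynahan is American.",
    "Bridget Moynahan is an actress.",
    "Bridget Moynahan is a model.",
    "Bridget Moynahan is a producer.",
    "She is best known for her roles in Grey's Anatomy.",
]
verdicts = [int(is_supported(f, passages)) for f in facts]
print(verdicts)  # [1, 1, 1, 0, 0]
```

A real verifier would replace `is_supported` with a prompt asking an LLM whether the retrieved passages entail the fact; word overlap is far too brittle for production use.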

Bridget Moynahan is American. ✅ → 1

Bridget Moynahan is an actress. ✅ → 1

Bridget Moynahan is a model. ✅ → 1

Bridget Moynahan is a producer. ❌ → 0

She is best known for her roles in Grey’s Anatomy. ❌ → 0

The final score is therefore (1 + 1 + 1 + 0 + 0) / 5 = 0.6.
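The same arithmetic as a small Python sketch. The function names are hypothetical, and encoding an abstention as `None` and skipping it when averaging over a whole set of prompts reflects my reading of how the paper aggregates scores, so treat that part as an assumption.

```python
from statistics import mean
from typing import List, Optional


def factscore(verdicts: List[int]) -> float:
    """Per-generation score: fraction of atomic facts judged supported (1) vs. not (0)."""
    if not verdicts:
        raise ValueError("a generation needs at least one atomic fact")
    return sum(verdicts) / len(verdicts)


def model_factscore(responses: List[Optional[List[int]]]) -> float:
    """Average the per-generation scores over prompts,
    skipping prompts where the model abstained (encoded here as None)."""
    scores = [factscore(v) for v in responses if v is not None]
    if not scores:
        raise ValueError("the model abstained on every prompt")
    return mean(scores)


print(factscore([1, 1, 1, 0, 0]))  # 0.6
```

Weighting every fact equally keeps the metric simple, but it also means a long answer full of trivially true facts can mask one serious error.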

Here’s the full example from the original paper👇

How to mitigate hallucinations?

This is a complex problem that you can address from multiple angles.

👉 Product-Level Recommendations:

Hallucinations in Large Language Models (LLMs) are a real challenge, but with the right strategies, you can minimize their impact. Here are some product-level recommendations to help you navigate this.

These recommendations challenge the way you intend to apply and interact with LLMs.

Purposeful Design: Start by structuring your use case to naturally reduce hallucination risks. For instance, for content generation applications, focus on opinion pieces over factual articles.

Empower User Editability: Allow users to edit and refine AI-generated content. This not only encourages scrutiny but also improves content reliability.

User Accountability: Clearly communicate that users bear responsibility for the content generated and published.

Citations and References: Implement a feature that includes citations, providing a safety net for users to verify information before sharing.

Operational Flexibility: Offer various operational modes, such as a "precision" mode that utilizes a more accurate model, albeit at a higher computational cost.

User Feedback Loop: Create a feedback mechanism for users to report inaccuracies, harmful content, or incompleteness. This user data is invaluable for refining the model in future iterations.

Output Control: Mindfully manage the length and complexity of generated responses. Longer and more complex outputs have a higher potential for hallucinations.

Structured Input/Output: Consider implementing structured fields rather than free-form text, particularly useful in applications like resume generation. Predefined fields for educational background, work experience, and skills can be a game-changer.
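As an illustration of structured output, here is a minimal Python sketch that validates a model's JSON against fixed resume fields. The schema, field names, and `parse_resume` helper are hypothetical; the idea is that anything outside the predefined fields (such as an invented "awards" section) is rejected instead of being shown to the user.

```python
import json
from dataclasses import dataclass, fields
from typing import List


@dataclass
class ResumeDraft:
    """Hypothetical schema: one field per section the UI exposes."""
    education: List[str]
    work_experience: List[str]
    skills: List[str]


def parse_resume(raw: str) -> ResumeDraft:
    """Parse the model's JSON output and reject anything outside the schema."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name for f in fields(ResumeDraft)}
    if set(data) != expected:
        raise ValueError(f"missing or unexpected fields: {sorted(set(data) ^ expected)}")
    for name in expected:
        value = data[name]
        if not isinstance(value, list) or not all(isinstance(item, str) for item in value):
            raise ValueError(f"field {name!r} must be a list of strings")
    return ResumeDraft(**data)


raw = '{"education": ["BSc Computer Science"], "work_experience": ["Data analyst, 2019-2022"], "skills": ["Python", "SQL"]}'
draft = parse_resume(raw)
print(draft.skills)  # ['Python', 'SQL']
```

Validation does not make the content inside each field true, but it narrows where hallucinations can appear and gives the UI a natural place to let users edit each section.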

Addressing hallucinations is crucial for the responsible use of LLMs. Implementing these recommendations can improve both user experience and content reliability.

👉 General prompting guidelines

It’s no secret that adjusting your prompts dramatically improves the answers of your LLM.

Here are some principles I've applied that proved effective:

Assertive Tone: Use ALL CAPS to highlight key directives and improve model compliance.

Context Matters: Provide more background information to anchor the model's responses. For example, attributing a “role” to the LLM generally helps direct the generated text toward a desired response.

Refinement Steps: Review the initial output and make necessary adjustments.

Inline Citations: Ask the model to back its claims with references.

Selective Grounding: Identify situations where grounding is essential versus optional.

Reiteration: Emphasize key instructions at the end of prompts.

Echo Input: Ask the model to recap the essential input details so you can check that it understood them.

Algorithmic Filtering: Use algorithms (e.g., search or ranking) to filter and prioritize the relevant information you pass to the model.
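Several of these guidelines can be combined in a single prompt template. The sketch below is hypothetical (the `build_prompt` helper, its section wording, and the numbered-citation format are my assumptions, not a standard) and only assembles a string; sending it to a model is left out.

```python
from typing import List


def build_prompt(role: str, passages: List[str], question: str, key_rule: str) -> str:
    """Hypothetical template combining several of the guidelines above."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    sections = [
        f"You are {role}.",                      # Context matters: assign a role
        f"Background:\n{context}",               # Context matters: anchor the answer
        f"IMPORTANT: {key_rule.upper()}",        # Assertive tone: key directive in caps
        "Start by restating the question and the background facts you will rely on.",  # Echo input
        "Back every claim with an inline citation such as [1].",  # Inline citations
        f"Question: {question}",
        f"REMINDER: {key_rule.upper()}",         # Reiteration: repeat the rule at the end
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    role="a careful medical information assistant",
    passages=["Ibuprofen is a nonsteroidal anti-inflammatory drug (NSAID)."],
    question="What class of drug is ibuprofen?",
    key_rule="only answer from the background; otherwise say you don't know",
)
print(prompt)
```

Keeping the template in code makes it easy to A/B test one guideline at a time, which pairs well with a metric like FActScore.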

👉 Chain of Thought (CoT)

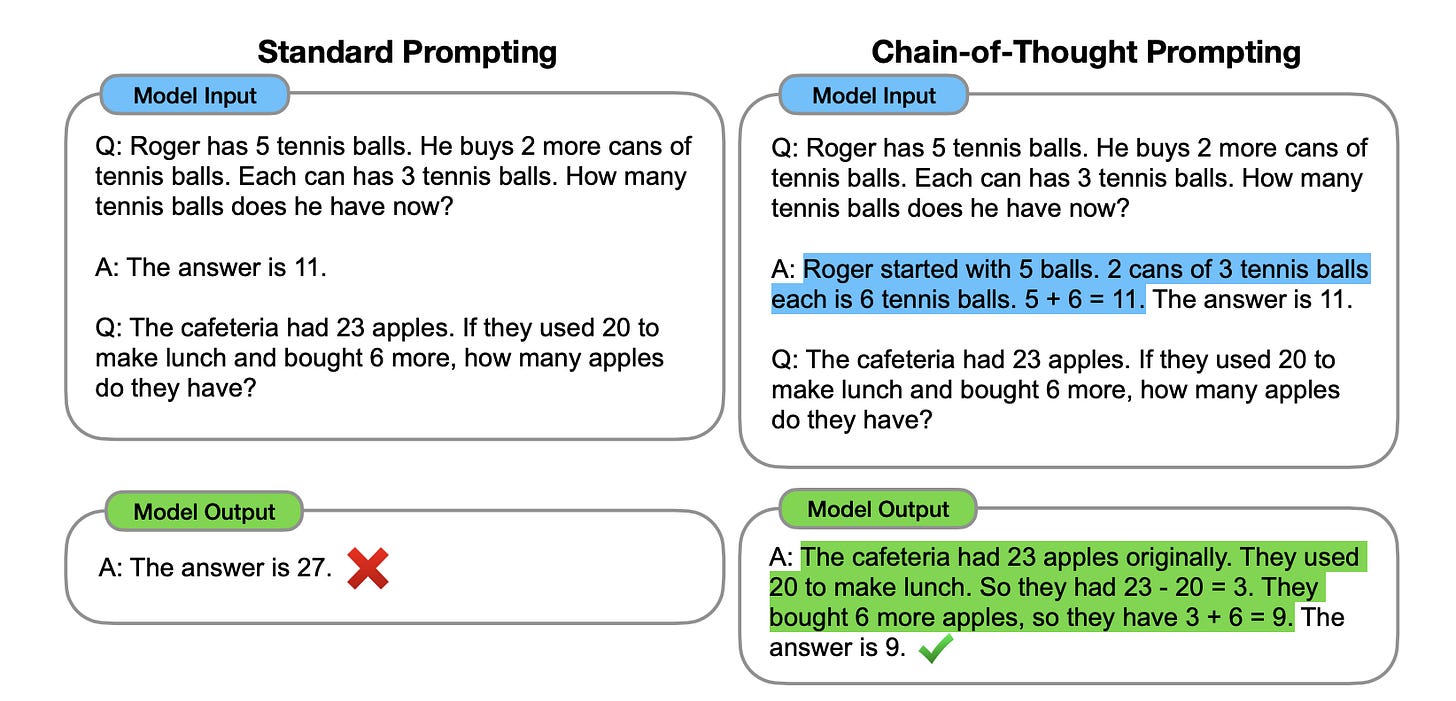

The concept of a 'Chain of Thought' was first introduced in the paper titled 'Chain-of-Thought Prompting Elicits Reasoning in Large Language Models' by researchers at Google.

The underlying principle is straightforward: since Large Language Models (LLMs) are primarily trained to predict tokens rather than engage in explicit reasoning, you can encourage reasoning by prompting them to spell out intermediate steps, for example with few-shot examples that show step-by-step solutions.

Here's a simple example from the original paper:

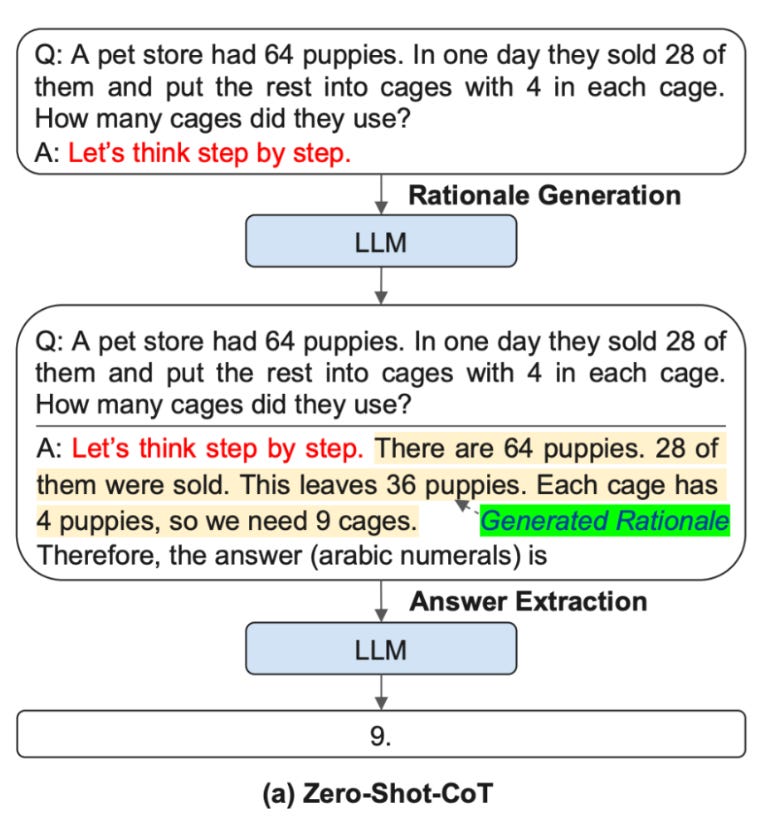

Another way to apply CoT, called zero-shot CoT, is to simply ask the LLM to “think step by step”.

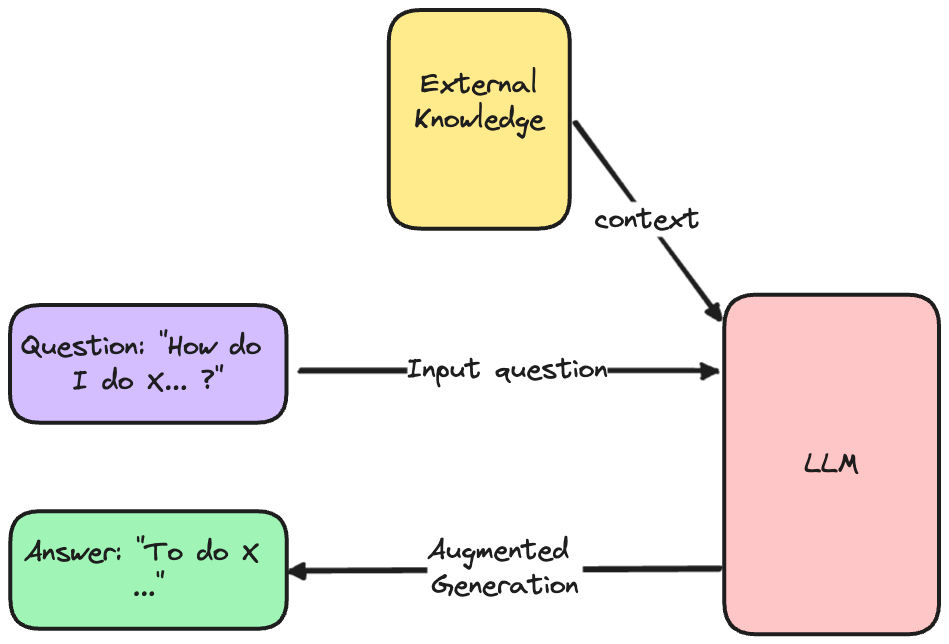
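Both flavors are easy to express as prompt templates. The Python sketch below is illustrative: the helper names are hypothetical, the exemplar is the tennis-ball example from the CoT paper, and the answer extractor assumes the model ends with "The answer is N", which you would have to request or verify in practice.

```python
import re
from typing import Optional

# Exemplar from the CoT paper's arithmetic examples: the answer spells out intermediate steps.
FEW_SHOT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def few_shot_cot(question: str) -> str:
    """Few-shot CoT: prepend worked examples whose answers show their reasoning."""
    return f"{FEW_SHOT_EXEMPLAR}\n\nQ: {question}\nA:"


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: no exemplars, just a trigger phrase after 'A:'."""
    return f"Q: {question}\nA: Let's think step by step."


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a 'The answer is N.' style completion."""
    match = re.search(r"The answer is\s*(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


q = "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?"
print(zero_shot_cot(q))
print(extract_answer("They had 23 - 20 = 3 apples, then 3 + 6 = 9. The answer is 9."))  # 9
```

Extracting a final answer separately from the reasoning also makes it easier to score CoT outputs automatically.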

👉 Grounding the answer with RAG

RAG is the acronym for Retrieval Augmented Generation.

RAG is a simple yet powerful framework to mitigate hallucinations. Its primary purpose is to augment an LLM with factual, verified context drawn from an external database.

Here’s how RAG works:

It first takes an input question and retrieves relevant document chunks from an external database. It then passes those chunks as context in a prompt to help an LLM generate a grounded answer.

That’s basically saying:

“Hey LLM, here’s my question, and here are some pieces of text to help you understand the problem. Give me an answer.”
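The retrieve-then-prompt loop can be sketched in a few lines of Python. To keep it self-contained, this hypothetical sketch swaps embeddings and a vector store for bag-of-words cosine similarity over an in-memory list and stops at building the prompt; the documents and helper names are made up for the example.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, chunks: List[str]) -> str:
    """Put the retrieved chunks in the prompt as numbered context."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(chunks, start=1))
    return (
        "Answer the question using ONLY the context below. "
        "If the context is not enough, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


documents = [
    "Paris is the capital of France.",
    "The Eiffel Tower is in Paris.",
    "Photosynthesis converts light into chemical energy.",
]
question = "What is the capital of France?"
print(build_rag_prompt(question, retrieve(question, documents, k=1)))
```

In a real system, `retrieve` would query a vector store with embeddings, and the numbered context makes it straightforward to ask the model for inline citations.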

Hallucinations are one of the main reasons many companies still hesitate to deploy LLM-based solutions to production.

To keep this issue under control, it’s important to:

Understand that this phenomenon is inherent to the way LLMs are trained: hallucinations stem from pure statistics, not from intrinsic cognitive abilities.

Be able to evaluate hallucinations quantitatively so you can run experiments efficiently.

Mitigate hallucinations with a multi-faceted strategy.

I hope this issue addressed these 3 points in the most comprehensive way.

That’d be all for me today.

Until next time 👋