The Tech Buffet #12: Improve RAG Pipelines With These 3 Indexing Methods

The data we retrieve doesn’t have to be the same as the data we used while indexing

Hello there 👋🏻, I’m Ahmed. I write The Tech Buffet to share weekly practical tips on improving ML systems. Join the family (+600 🎉) and subscribe!

In this issue, we’ll explore 3 different ways you can index your data when building RAGs.

These methods are worth experimenting with, as they can improve retrieval accuracy and, in turn, the quality of the generated answers.

Let’s see how.

Reminder on data indexing in typical RAGs

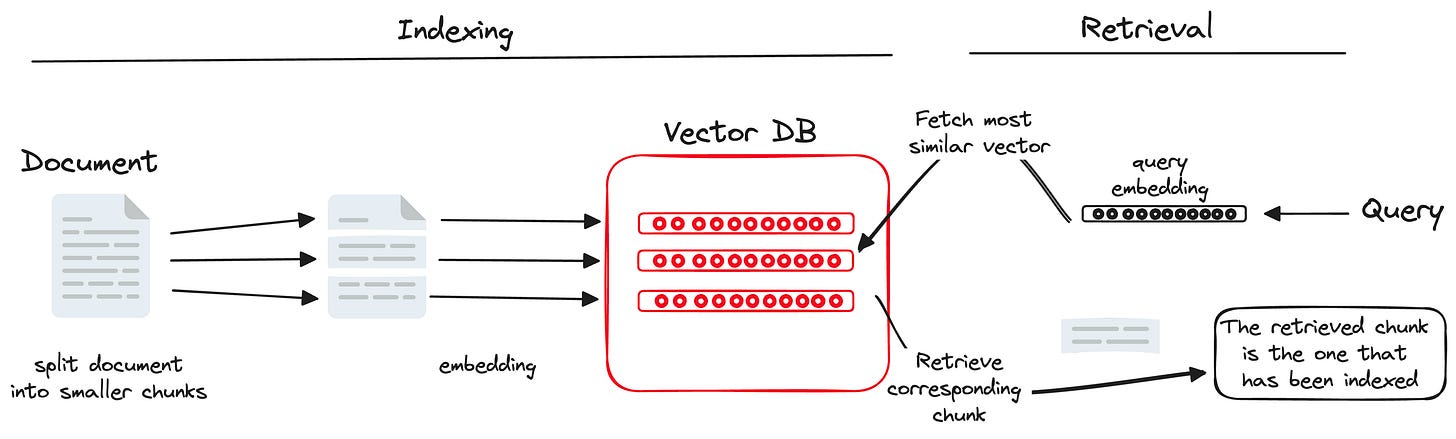

In the default implementation of a RAG system, documents are first split into chunks.

Then, each chunk is embedded and indexed into a vector database.

In the retrieval step, the input query is also embedded and the most similar chunks are extracted.

In this setup, the data (i.e. the chunks) we retrieve is the same as the data we index.
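To make the default setup concrete, here is a minimal sketch in plain Python. It assumes a toy bag-of-words `embed` function in place of a real embedding model and an in-memory list in place of a vector database. Every name in it (`embed`, `cosine`, `build_index`, `retrieve`) is made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    # ASSUMPTION: toy stand-in for an embedding model (word counts, not a real model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks):
    # Default setup: the text we embed and the text we return are the same chunk.
    return [(embed(chunk), chunk) for chunk in chunks]


def retrieve(index, query, k=1):
    # Embed the query and return the k most similar chunks.
    query_vec = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vec, entry[0]), reverse=True)
    return [chunk for _, chunk in ranked[:k]]


chunks = [
    "The vector database stores one embedding per chunk.",
    "Our office is closed on public holidays.",
]
index = build_index(chunks)
top_chunks = retrieve(index, "Where are chunk embeddings stored?")
```

Each index entry is a pair `(vector, chunk)`. The three methods in this issue only change what goes into the first slot of that pair.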

This is the most natural and intuitive implementation.

🚨 However, this doesn’t always have to be the case.

We can index the chunks differently to increase the performance of such systems: the data we retrieve doesn’t have to be the same as the data we used while indexing.

1—Index chunks by their subparts 🧩

Instead of indexing the whole chunk directly, we can split it again into smaller pieces (e.g. sentences) and index the chunk multiple times, once per piece.

Why is this useful?

Imagine dealing with long and complex chunks that discuss multiple topics or contain conflicting information. Using them in a typical RAG will likely generate noisy outputs with some irrelevant content.

If we separate these chunks into smaller sentences, each sentence will likely have a clear and well-defined topic that matches the user query more accurately.

Because each sentence points back to its parent chunk, retrieval still returns the full chunk. The LLM therefore gets both the part that matches the query and the broader context around it (which is not present in the indexed sentence), and it can use both to generate a comprehensive answer.
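Here is a minimal sketch of this idea, assuming a toy bag-of-words `embed` function instead of a real embedding model, a naive regex sentence splitter, and a plain dictionary (`chunk_store`) holding the full chunks. All names are hypothetical. The index only stores sentence vectors plus a chunk ID, and the ID is used to look up the full chunk at retrieval time.

```python
import math
import re
from collections import Counter


def embed(text):
    # ASSUMPTION: toy stand-in for an embedding model (word counts, not a real model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_sentences(chunk):
    # Naive splitter: assumes sentences end with '.', '!' or '?'.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", chunk) if s.strip()]


def build_index(chunks):
    chunk_store = dict(enumerate(chunks))  # chunk_id -> full chunk text
    index = []
    for chunk_id, chunk in chunk_store.items():
        for sentence in split_sentences(chunk):
            # Index the sentence, but remember which chunk it came from.
            index.append((embed(sentence), chunk_id))
    return index, chunk_store


def retrieve(index, chunk_store, query, k=1):
    query_vec = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vec, entry[0]), reverse=True)
    chunk_ids = []
    for _, chunk_id in ranked:
        if chunk_id not in chunk_ids:  # several sentences may share a parent chunk
            chunk_ids.append(chunk_id)
        if len(chunk_ids) == k:
            break
    return [chunk_store[chunk_id] for chunk_id in chunk_ids]


chunks = [
    "Refunds are issued within 14 days. Shipping is free for orders over 50 euros. "
    "Gift cards never expire.",
    "Our support team answers emails on weekdays. "
    "Phone support is available for premium customers.",
]
index, chunk_store = build_index(chunks)
results = retrieve(index, chunk_store, "How long do refunds take?")
```

The query matches a single sentence about refunds, yet `results` holds the whole parent chunk, including the shipping and gift card sentences. In a real system the dictionary could be replaced by any key-value or document store keyed by chunk ID.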

2—Index chunks by the questions they answer ❓

Instead of indexing the chunks directly, we can instruct the LLM to generate the questions they answer and use them for indexing. This is a simple approach.

When a query is submitted, the RAG compares it to the generated questions rather than to the chunks themselves, and picks the most similar ones. Then, based on these questions, it retrieves the corresponding chunks.

This indexing method is useful because it aligns the user's query/objective with the core content of the data.

If a user doesn’t formulate a very clear question, such an indexing method can reduce ambiguity. Instead of trying to figure out what chunks are relevant to the user’s question, we directly map it to existing questions that we know we have an answer for.
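The sketch below illustrates the mapping from questions back to chunks. It rests on two loud assumptions: `embed` is a toy bag-of-words function rather than a real embedding model, and `generate_questions` is a hypothetical placeholder that returns hand-written questions where a real pipeline would call an LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    # ASSUMPTION: toy stand-in for an embedding model (word counts, not a real model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


chunks = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Premium customers can reach phone support on weekdays.",
]

# ASSUMPTION: hand-written questions standing in for LLM output.
CANNED_QUESTIONS = {
    chunks[0]: ["How long does a refund take?", "When will I get my money back?"],
    chunks[1]: ["How can I contact support by phone?", "Who can use phone support?"],
}


def generate_questions(chunk):
    # Hypothetical placeholder: a real pipeline would prompt an LLM here.
    return CANNED_QUESTIONS[chunk]


def build_index(chunks):
    # Index each generated question, pointing back to the chunk that answers it.
    index = []
    for chunk in chunks:
        for question in generate_questions(chunk):
            index.append((embed(question), chunk))
    return index


def retrieve(index, query):
    # Return the chunk attached to the most similar indexed question.
    query_vec = embed(query)
    _, best_chunk = max(index, key=lambda entry: cosine(query_vec, entry[0]))
    return best_chunk


query = "when do I get my money back"
index = build_index(chunks)
best = retrieve(index, query)
direct_similarity = cosine(embed(query), embed(chunks[0]))
```

In this toy example the query shares no words with the refund chunk itself, so `direct_similarity` is zero, yet the chunk is still retrieved through one of its questions. Real embeddings are less literal than word counts, but the same effect is what makes this method worth trying.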

3—Index chunks by their summaries 📝

This indexing method is similar to the previous one. It uses the chunk summary for indexing instead of the questions it answers.

This is typically useful when the chunks contain redundant information or details that are irrelevant to the user’s query.

A summary also captures the essence of the information that the user is looking for.
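A compact sketch of summary-based indexing, under the same kind of assumptions: `embed` is a toy bag-of-words function, and `summarize` is a hypothetical placeholder that returns hand-written summaries where a real pipeline would call an LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    # ASSUMPTION: toy stand-in for an embedding model (word counts, not a real model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


chunks = [
    "Hi all, quick note before the weekend. As some of you may remember from "
    "last quarter, the deploy freeze starts Monday. Thanks, and enjoy the barbecue!",
    "Reminder that the cafeteria menu changes next week. The new menu includes "
    "more vegetarian options. See the poster near the entrance.",
]

# ASSUMPTION: hand-written summaries standing in for LLM output.
CANNED_SUMMARIES = {
    chunks[0]: "Deploy freeze starts Monday.",
    chunks[1]: "Cafeteria menu changes next week with more vegetarian options.",
}


def summarize(chunk):
    # Hypothetical placeholder: a real pipeline would prompt an LLM here.
    return CANNED_SUMMARIES[chunk]


# Embed the summary, but keep the full chunk as the thing we retrieve.
index = [(embed(summarize(chunk)), chunk) for chunk in chunks]

query_vec = embed("When does the deploy freeze start?")
_, best_chunk = max(index, key=lambda entry: cosine(query_vec, entry[0]))
```

The greeting and the barbecue never reach the index, so they cannot dilute the match. The LLM still receives the full chunk (`best_chunk`) as context.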

That’s it for today!

I hope you’ve enjoyed this issue and learned something useful about improving RAG indexing.

If you know about other indexing methods that improve RAG systems, please tell me about them (in the comments or via a reply to this email).

Interested in building RAGs for your company? Have a look at my next issue where I cover a curated list of top articles from the industry.

Until next time! 👋

Ahmed

Great post. So what's the best way to store and retrieve the big text chunks if the vector database contains only the small parts? Do you use a separate DB for this? Thanks!