The Tech Buffet #6: Why Your RAG is Not Reliable in Production

And how you should tune it properly 🛠️

Hello there 👋🏻, I’m Ahmed. I write The Tech Buffet to share weekly practical tips on productionizing ML systems. Join the family (+400 🎉) and subscribe!

With the rise of LLMs, the Retrieval Augmented Generation (RAG) framework has also gained popularity, because it makes it possible to build question-answering systems over external data.

We’ve all seen those demos of chatbots conversing with PDFs or emails.

While these systems are certainly impressive, they might not be reliable in production without tweaking and experimentation.

In this issue, we explore the problems behind the RAG framework and go over some tips to improve its performance.

These findings are based on my experience as an ML engineer who’s still learning about this tech.

RAG in a nutshell ⚙️

Let's get the basics right first.

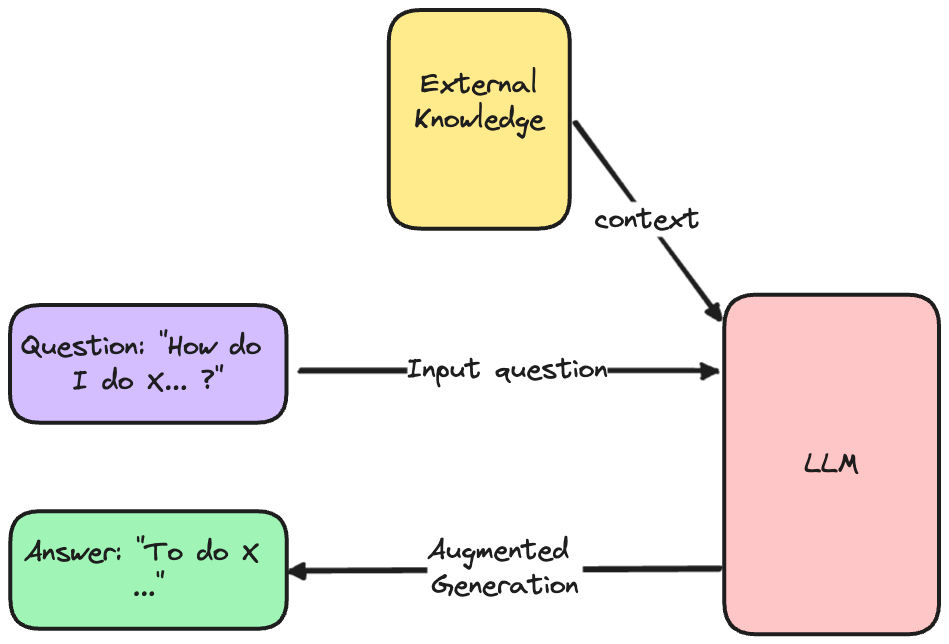

Here’s how RAG works:

It first takes an input question and retrieves the document chunks most relevant to it from an external database. Then, it passes those chunks as context in a prompt to help an LLM generate an augmented answer.

That’s basically saying:

“Hey LLM, here’s my question, and here are some pieces of text to help you understand the problem. Give me an answer.”
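To make that concrete, here's a minimal sketch of how the question and the retrieved chunks could be combined into one prompt. The function name `build_rag_prompt`, the template wording, and the sample chunks are all my own assumptions for illustration, not something imposed by a particular framework.

```python
def build_rag_prompt(question: str, chunks: list[str]) -> str:
    """Combine the question and the retrieved chunks into one prompt string."""
    # Number each chunk so the LLM (and you, when debugging) can tell them apart.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical chunks, standing in for what the retrieval step would return.
prompt = build_rag_prompt(
    "What is the refund window?",
    [
        "Refunds are accepted within 30 days of purchase.",
        "Shipping takes 3 to 5 business days.",
    ],
)
print(prompt)
```

The resulting string is what gets sent to the LLM. Everything the model knows about your data has to fit in that context block, which is why the quality of the retrieved chunks matters so much.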

You should not be fooled by the simplicity of this diagram.

In fact, RAG hides a certain complexity and involves the following components behind the scenes:

Loaders to parse external data in different formats: PDFs, websites, Doc files, etc.

Splitters to chunk the raw data into smaller pieces of text

An embedding model to convert the chunks into vectors

A vector database to store the vectors and query them

A prompt to combine the question and the retrieved documents

An LLM to generate the answer
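To see how these pieces fit together, here's a toy sketch of the splitter, embedding, and vector store steps in plain Python. Everything in it is an assumption made for illustration: the "embedding" is just a word-count vector, `ToyVectorStore` is an in-memory list, and the names and chunk sizes are hypothetical. A real system would swap in an actual embedding model and vector database.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Splitter: cut text into chunks of `chunk_size` words that overlap."""
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: sparse word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunks: list[str]) -> None:
        for chunk in chunks:
            self.items.append((chunk, embed(chunk)))

    def query(self, question: str, k: int = 2) -> list[str]:
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


# Indexing: split the raw text and store the embedded chunks.
document = (
    "Refunds are accepted within 30 days of purchase. "
    "Shipping takes 3 to 5 business days. "
    "Support is available by email on weekdays."
)
store = ToyVectorStore()
store.add(split_text(document))

# Retrieval: fetch the chunk closest to the question.
top_chunks = store.query("When are refunds accepted?", k=1)
print(top_chunks)
```

The retrieved chunks would then go into the prompt and on to the LLM. Notice how many knobs already show up in this tiny version: the chunk size, the overlap, the embedding, the similarity function, and `k`.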

If you like diagrams, here’s another for you.

A tad more complex but it illustrates the indexing and retrieval processes.

Phew!

Don’t worry though, you can still prototype your RAG very quickly.

Frameworks like LangChain abstract away most of the steps involved in building a RAG, which makes these systems easy to prototype.

How easy is that? 5-line-of-code easy.

Of course, there’s always a problem with the apparent simplicity of such code snippets: depending on your use case, they don’t always work as-is and need very careful tuning.

They’re great as quickstarts, but they’re certainly not a reliable solution for a production application.

The problems with RAG

If you start building RAG systems with little to no tuning, you may be unpleasantly surprised by the results.

Here’s what I noticed during the first weeks of using LangChain and building RAGs.