The Tech Buffet #17: 9 Effective Techniques To Boost Retrieval Augmented Generation (RAG) Systems

ReRank, Hybrid Search, Query Expansion, Leveraging Metadata and more...

Hello everyone 👋 Ahmed here. I’m the author of The Tech Buffet, a newsletter that demystifies complex topics in programming and AI. Subscribe for exclusive content.

2023 was, by far, the most prolific year in the history of NLP. This period saw the rise of ChatGPT alongside numerous other Large Language Models, both open-source and proprietary.

At the same time, fine-tuning LLMs became way easier, and the competition among cloud providers to offer GenAI services intensified significantly.

Interestingly, the demand for personalized and fully operational RAGs also skyrocketed across various industries, with each client eager to have their own tailored solution.

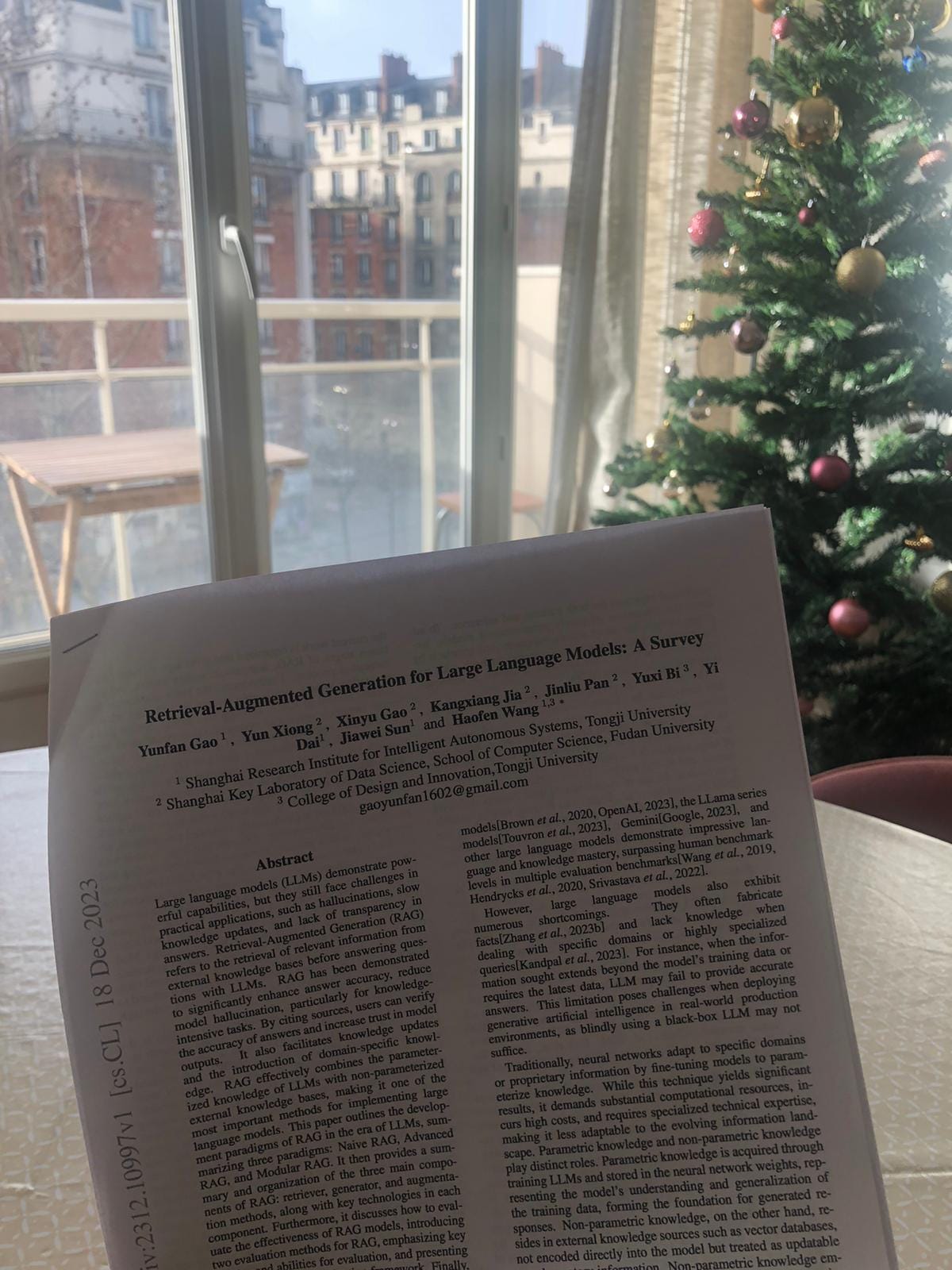

On that last point, in today’s issue we will discuss a paper that reviews the current state of the art in building fully functioning RAG systems.

I started reading this piece during my vacation, and it’s a must.

It covers everything you need to know about the RAG framework and its limitations. It also lists modern techniques to boost its performance in retrieval, augmentation, and generation.

The ultimate goal behind these techniques is to make this framework ready for scalability and production use, especially for use cases and industries where answer quality matters *a lot*.

I won’t discuss everything in this paper, but here are the key ideas that, in my opinion, would make your RAG more efficient.

1—🗃️ Enhance the quality of indexed data

Since the data we index determines the quality of the RAG’s answers, the first task is to curate it as much as possible before ingesting it (garbage in, garbage out still applies here).

You can do this by removing duplicate or redundant information, spotting irrelevant documents, and checking factual accuracy (when possible).

If the maintainability of the RAG matters, you also need to add mechanisms to refresh outdated documents.
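A minimal sketch of one such refresh mechanism, assuming you keep a small bookkeeping record (content hash plus indexing time) next to each document in the vector store. The `IndexedDoc` record, `plan_refresh`, and the `max_age` policy are hypothetical names chosen for illustration; a real pipeline would call its vector store’s own upsert and delete methods where this sketch only returns document IDs.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class IndexedDoc:
    """Hypothetical bookkeeping record stored alongside the vectors."""
    doc_id: str
    content_hash: str
    indexed_at: datetime


def content_hash(text: str) -> str:
    # Stable fingerprint of the document body, used to detect edits.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_refresh(source_docs: dict[str, str],
                 index: dict[str, IndexedDoc],
                 now: datetime,
                 max_age: timedelta) -> dict[str, list[str]]:
    """Decide which documents to add, re-embed, or delete from the index."""
    to_add, to_update = [], []
    for doc_id, text in source_docs.items():
        record = index.get(doc_id)
        if record is None:
            to_add.append(doc_id)  # new document, never indexed
        elif record.content_hash != content_hash(text):
            to_update.append(doc_id)  # content changed since it was indexed
        elif now - record.indexed_at > max_age:
            to_update.append(doc_id)  # unchanged, but past the refresh window
    # Documents that vanished from the source should leave the index too.
    to_delete = [doc_id for doc_id in index if doc_id not in source_docs]
    return {"add": sorted(to_add), "update": sorted(to_update), "delete": sorted(to_delete)}


# Usage with toy data.
now = datetime(2024, 1, 15)
index = {
    "a": IndexedDoc("a", content_hash("old text"), datetime(2024, 1, 10)),
    "b": IndexedDoc("b", content_hash("same"), datetime(2023, 1, 1)),
    "c": IndexedDoc("c", content_hash("gone"), datetime(2024, 1, 14)),
}
source = {"a": "new text", "b": "same", "d": "brand new"}
plan = plan_refresh(source, index, now, max_age=timedelta(days=90))
print(plan)  # {'add': ['d'], 'update': ['a', 'b'], 'delete': ['c']}
```

Hashing catches edited documents, while the age check covers sources whose text did not change but whose facts may have gone stale; the 90-day window is an arbitrary example value.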

Cleaning the data is a step that is often neglected when building RAGs, as we usually tend to pour in all the documents we have without verifying their quality.

Here are some quick fixes that I suggest you go through:

Remove text noise by cleaning special characters, weird encodings, unnecessary HTML tags… Remember those old regex-based NLP techniques? You can reuse them.

Spot outlier documents that are irrelevant to the main topics and remove them. You can do this with topic extraction, dimensionality reduction, and data visualization.

Remove redundant documents using similarity metrics.
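The first and last fixes can be sketched with the standard library alone. The regexes below are one reasonable starting set, not an exhaustive cleaner. For redundancy, the post does not name a specific metric, so this sketch assumes Jaccard similarity over 3-word shingles with an arbitrary 0.8 threshold; cosine similarity between embeddings is a common alternative. Outlier detection is left out because it needs topic models or embeddings.

```python
import html
import re


def clean_text(raw: str) -> str:
    """Strip common noise: HTML tags, entities, control characters, extra spaces."""
    text = re.sub(r"<[^>]+>", " ", raw)                     # drop HTML tags
    text = html.unescape(text)                              # &amp; -> &, &nbsp; -> \xa0
    text = re.sub(r"[\x00-\x08\x0b-\x1f\x7f]", " ", text)   # control characters
    text = re.sub(r"\s+", " ", text)                        # collapse whitespace (incl. \xa0)
    return text.strip()


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences, lowercased."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(docs: list[str], threshold: float = 0.8) -> list[str]:
    """Keep the first document of each near-duplicate group (O(n^2), fine for a sketch)."""
    kept: list[str] = []
    kept_shingles: list[set] = []
    for doc in docs:
        sh = shingles(doc)
        if all(jaccard(sh, other) < threshold for other in kept_shingles):
            kept.append(doc)
            kept_shingles.append(sh)
    return kept


raw_docs = [
    "<p>RAG combines retrieval &amp; generation.</p>",
    "RAG combines retrieval & generation.",
    "<div>Chunk size controls   retrieval granularity.</div>",
]
cleaned = [clean_text(d) for d in raw_docs]
print(deduplicate(cleaned))
# ['RAG combines retrieval & generation.', 'Chunk size controls retrieval granularity.']
```

Cleaning before deduplicating matters here: the first two documents only become exact duplicates once the HTML tag and the `&amp;` entity are gone.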

2—🛠️ Optimize index structure

When constructing your RAG, the chunk size is a key parameter. It determines the length of the documents we retrieve from the vector store.

A small chunk size might produce chunks that miss crucial information, while a large chunk size can introduce irrelevant noise.

Coming up with the optimal chunk size is about finding the right balance.

How to do that efficiently? Trial and error.

However, this doesn’t mean that you have to make random guesses and perform qualitative assessments for every experiment.

You can find that optimal chunk size by running evaluations on a test set and computing metrics. LlamaIndex has interesting features to do this. You can read more about that in their blog.
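The sketch below does not use LlamaIndex; it shows the same evaluation loop with the standard library only. It chunks a corpus at several sizes, runs a small hand-written test set of question/expected-answer pairs, and measures a hit rate: the fraction of questions whose top-ranked chunk contains the expected answer. The word-overlap `retrieve` function is a stand-in for embedding search, and the corpus, test set, and function names are hypothetical.

```python
import re


def chunk_words(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of `size` words, sharing `overlap` words with the previous chunk."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, chunks: list[str]) -> str:
    """Toy retriever standing in for a vector store: rank chunks by shared words."""
    q = tokens(query)
    return max(chunks, key=lambda c: len(q & tokens(c)))


def hit_rate(corpus: str, test_set: list[tuple[str, str]], size: int) -> float:
    """Fraction of questions whose top chunk contains the expected answer."""
    chunks = chunk_words(corpus, size)
    hits = sum(answer.lower() in retrieve(q, chunks).lower() for q, answer in test_set)
    return hits / len(test_set)


corpus = (
    "RAG systems retrieve chunks from a vector store before generation. "
    "Chunk size controls how much context each retrieved passage carries. "
    "Small chunks can miss crucial information. Large chunks can add noise. "
    "Metadata such as dates and sections enables filtering."
)
test_set = [
    ("What does chunk size control?", "how much context"),
    ("What can small chunks miss?", "crucial information"),
    ("What enables filtering?", "Metadata such as dates"),
]
for size in (4, 8, 16, 32):
    print(f"chunk size {size:>2}: hit rate {hit_rate(corpus, test_set, size):.2f}")
```

Hit rate alone tends to reward larger chunks, since a single chunk holding the whole corpus always "hits". That is the noise side of the trade-off, so in practice you would pair retrieval metrics with answer-quality scores from the LLM before settling on a size.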

3—🏷️ Add metadata

Attaching metadata to the indexed vectors helps structure them better and improves search relevance.

Here are some scenarios where metadata is useful:

If you search for items and recency is a criterion, you can sort on a date metadata field.

If you search over scientific papers and you know in advance that the information you’re looking for is always located in a specific section (say, the experiments section), you can add the article section as metadata to each chunk and filter on it to match experiments only.

Metadata is useful because it adds a layer of structured search on top of vector search.
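Most vector databases let you attach a metadata payload to each vector and filter on it at query time. The in-memory sketch below only illustrates the idea behind both scenarios: it pre-filters chunks on exact metadata matches, ranks the survivors by cosine similarity, and can optionally reorder the top hits by a date field. The `Chunk` class, the toy 2-D vectors (a real system would use an embedding model), and the `section`/`date` field names are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Chunk:
    text: str
    vector: list[float]  # toy embedding
    metadata: dict


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec: list[float], chunks: list[Chunk],
           where: Optional[dict] = None, top_k: int = 3,
           sort_by: Optional[str] = None) -> list[Chunk]:
    """Filter on metadata first, then rank the remaining chunks by similarity."""
    where = where or {}
    candidates = [c for c in chunks
                  if all(c.metadata.get(k) == v for k, v in where.items())]
    ranked = sorted(candidates, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:top_k]
    if sort_by is not None:
        # Optional secondary ordering of the top hits, e.g. most recent first.
        ranked.sort(key=lambda c: c.metadata[sort_by], reverse=True)
    return ranked


chunks = [
    Chunk("Setup of the ablation study", [0.9, 0.1],
          {"section": "experiments", "date": date(2023, 5, 1)}),
    Chunk("Related work on retrieval", [0.8, 0.2],
          {"section": "related_work", "date": date(2023, 6, 1)}),
    Chunk("Results on the QA benchmark", [0.7, 0.3],
          {"section": "experiments", "date": date(2023, 9, 1)}),
]
query = [1.0, 0.0]
hits = search(query, chunks, where={"section": "experiments"}, sort_by="date")
print([c.text for c in hits])
# ['Results on the QA benchmark', 'Setup of the ablation study']
```

Filtering before ranking guarantees that every returned chunk satisfies the metadata constraint; ranking first and filtering afterwards can leave you with fewer than `top_k` results.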

4—↔️ Align the input query with the documents

LLMs and RAGs are powerful because they offer the flexibility of expressing your query in natural language, thus lowering the entry barrier for data exploration and more complex tasks. (Learn here how RAGs can help generate SQL code from plain English instructions).

Sometimes, however, there is a mismatch between the user’s query, typically a few words or a short sentence, and the indexed documents, which are often written as longer sentences or even paragraphs.

Let’s go through an example to understand this.
