The Tech Buffet #2: How To Use LangChain to Perform Question Answering Over Documents

A step by step tutorial on data loading, chunking, embedding and answer generation

Hello there 👋, I am Ahmed, your host at The Tech Buffet.

In this newsletter, I deliver weekly practical tips and tutorials on programming and machine learning. My goal is to arm you with the right tools to build your AI products fast and in a reliable fashion.

Consider subscribing to access exclusive (upcoming) material including notebooks, scripts, and code reviews.

In the previous issue, we went through the architecture behind a question-answering system that provides answers from private documents.

In this post, we’ll turn this architecture into actual Python code that you can start from and adapt to your projects.

We’ll use the Langchain framework to orchestrate the various involved components.

Let’s have a look!

PS: All the code is available on my repo. Check it out to run it on your local machine.

Before we dive in, here’s the full architecture of the app:

The data we’ll experiment with today will be the first chapter of this book Effective Python: 90 Specific Ways to Write Better Python

The book will be loaded in a PDF format.

The script will hopefully answer questions about Python tips and best practices (and maybe make us better developers 😉).

PS: You can of course replace this book with anything you want.

👉 Load the document

Let’s start by loading the PDF file page by page and keep the pages we’re interested in (23–63).

👉 Split each page into chunks

Let’s split each page into 1024-character chunks with an overlap of 128 characters between chunks. (Having overlaps between chunks later helps construct the full context over a long piece of text)

👉 Embed chunks and index their embedding into a Chroma database

These two steps are combined in one command Chroma.from_documents .

👉 Generate the answer

A lot of things happen in these lines of code, as Langchain abstracts many underlying tasks:

We define a custom prompt with the

contextand thequestion(just like we showed in the diagram)We instantiate a Chat LLM based on the

gpt-3.5-turbomodelWe define a

RetrievalQAchain that uses the Chroma vector store as a retriever and the custom prompt as an additional argument

When we run qa_chain over the question, the magic happens 🌟: the query is embedded, similar chunks are extracted from the retriever and then added to the prompt, and finally, the LLM runs over the prompt to produce the answer.

Demo 🚀

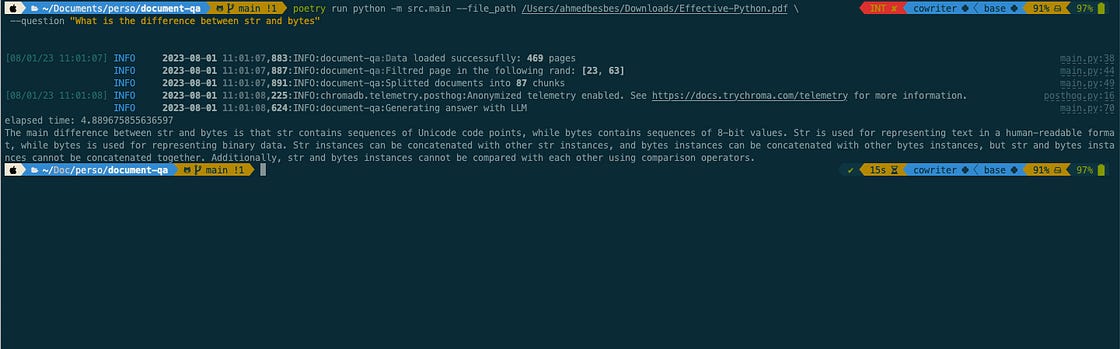

Let’s give the script a try and ask the following question:

What is the difference between str and bytes?

And the answer is …

The main difference between str and bytes is that str contains sequences of Unicode code points, while bytes contains sequences of 8-bit values. Str is used for representing text in a human-readable format, while bytes is used for representing binary data. Str instances can be concatenated with other str instances, and bytes instances can be concatenated with other bytes instances, but str and bytes instances cannot be concatenated together. Additionally, str and bytes instances cannot be compared with each other using comparison operators.

The full code is available here.

In this post, we went over a starter Python implementation using Langchain to easily orchestrate the different components of a question-answering system.

There’s definitely more work to make the solution more accurate, stable, and scalable. Check out the Langchain documentation to learn more.

How do you know the answer is based on the book content and not solely on the LLM training data